This weekend, I’m building a service that turns PDFs into chaptered, audiobook‑quality narration in minutes—upload, listen in a built‑in player, and download MP3/M4B files with clean metadata.

technically I can put the Bridge verification code in my feed’s metadata so no-one really ever sees or notices it 🤔 Maybe I’ll add a first-class button/field thingy in yarnd so users can “register their feed” straight from their pod? 🤔

No, I was using an empty hash URL when the feed didn’t specify a url metadata. Now I’m correctly falling back to the feed URL.

whoo fixed a long-standing bug with identicons for feeds with no avatar in their metadata

Hint:

# nick = ...

# avatar = ...

Oh, and I forgot (because I thought it was obvious, my bad), set a nick, and a url at the very minimum on your feed. See “Metadata Extension”.
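For anyone checking their own feed against that hint, here is a minimal sketch of reading `# key = value` comment lines and reporting which of the two recommended fields are missing. The regex and the function names `parse_metadata` and `missing_minimum` are assumptions for illustration, not taken from any existing client.

```python
import re

# Assumption: metadata lines look like "# key = value" at the start of a line.
META_RE = re.compile(r"^#\s*([A-Za-z0-9_-]+)\s*=\s*(.*?)\s*$")

def parse_metadata(feed_text):
    """Collect '# key = value' comment lines into a dict of lists (keys may repeat)."""
    meta = {}
    for line in feed_text.splitlines():
        m = META_RE.match(line)
        if m:
            meta.setdefault(m.group(1), []).append(m.group(2))
    return meta

def missing_minimum(meta):
    """Return the recommended fields (nick, url) that are absent or empty."""
    return [key for key in ("nick", "url") if not any(meta.get(key, []))]
```

Values are kept as lists because fields such as url may legitimately appear more than once.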

Client ID Metadata Document Adopted by the OAuth Working Group

The IETF OAuth Working Group has adopted the Client ID Metadata Document specification! ⌘ Read more

Hello again everyone! A little update on my twtxt client.

I think it’s finally shaping a bit better now, but… ☝️

As I’m trying to put all the parts together, I decided to build multiple parallel UIs, to ensure I don’t accidentally create a structure that is more rigid than planned.

I already decided on a UI that I would want to use for myself, it would be inspired by moshidon, misskey and some other “social feeds” mock-ups I found on dribbble.

I also plan on building a raw HTML version (for anyone wanting to do a full DIY client).

I would love to get any suggestions of what you would like to see (and possibly use) as a client, by sharing a link, app/website name or even a sketch made by you on paper.

I think I’ll pick a third and maybe a fourth design to build together with the two already mentioned.

For reference, the screens I think of providing are (some might be optional or conditionally/manually hidable):

- Global / personal timeline screen

- Profile screen (with timeline)

- Thread screen

- Notifications screen or popup (both valid)

- DM list & chat screens (still planning, might come later)

- Settings screen (it’ll probably be a hard coded form, but better mention it)

- Publish / edit post screen or popup (still analysing some use cases, as some “engines” might not have direct publishing support)

I also plan on adding two optional metadata fields:

display_name: To show a human-readable alternative for a nick; it falls back to nick if not defined

banner: Using the same format as avatar, but the image expected is wider, inspired by other socials around

I also plan on supporting any metadata provided, including a dynamically parsable regex rule format for those extra fields, this should allow anyone to build new clients that don’t limit themselves to just the social aspect of twtxt, hoping to see unique ways of using twtxt! 🤞

is the first url metadata field unequivocally treated as the canon feed url when calculating hashes, or are they ignored if they’re not at least proper urls? do you just tolerate it if they’re impersonating someone else’s feed, or pointing to something that isn’t even a feed at all?

and if the first url metadata field changes, should it be logged with a time so we can still calculate hashes for old posts? or should it never be updated? (in the case of a pod, where the end user has no choice in how such events are treated) or do we redirect all the old hashes to the new ones (probably this, since it would be helpful for edits too)

I think I’m just about ready to go live with my new blog (migrated from MicroPub). I just finished migrating all of the content over, fixing up metadata, cleaning up, migrating media, optimizing media.

The new blog for prologic.blog, soon to be powered by zs using the zs-blog-template, is coming along very nicely 👌 It was actually pretty easy to do the migration/conversion in the end. The results are not too shabby either.

Before:

- ~50MB repo

- ~267 files

After:

- ~20MB repo

- ~88 files

@bender@twtxt.net Ahh yes I see what you mean. No indication of when the post was made, right? That should ideally be displayed on the page somewhere? Would you expect it in the url as well, because not having /posts/yyyy/mm/dd/.... was actually intentional. But yeah I should figure out where to put some additional metadata on the page.

nicks? i remember reading somewhere whitespace should not be allowed, but i don't see it in the spec on twtxt.dev — in fact, are there any other resources on twtxt extensions outside of twtxt.dev?

@zvava@twtxt.net Good question. This is the spec, I think:

https://twtxt.dev/exts/metadata.html#nick

It doesn’t say much. 🤔

In the wild, I’ve only seen “traditional” nick names, i.e. ASCII 0x21 thru 0x7E.

My client removes anything but r'[a-zA-Z0-9]' from nick names.
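A minimal sketch of that filter, assuming it simply drops every character outside the quoted character class (the function name `sanitize_nick` is made up here; the real client may differ in details):

```python
import re

def sanitize_nick(nick):
    """Drop every character that is not an ASCII letter or digit."""
    return re.sub(r"[^a-zA-Z0-9]", "", nick)
```

Note that this is stricter than the 0x21 thru 0x7E range seen in the wild: punctuation such as `_` or `.` is removed as well, and so is any whitespace.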

at first i dismissed the idea of likes on twtxt as not sensible…like at all — then i considered they could just be published in a metadata field (though that field could get really unruly after a while)

retwts are plausible, as “RE: https://example.com/twtxt.txt#abcdefg”, the hash could even be the original timestamp from the feed to make it human readable/writable, though im extremely wary of clogging up timelines

i thought quote twts could be done extremely sensibly, by interpreting a mention+hash at the end of the twt differently to when placed at the beginning — but the twt subject extension requires it be at the beginning, so the clean fallback to a normal reply i originally imagined is out of the question — it could still be possible (reusing the retwt format, just like twitter!) but i’m not convinced it’s worth it at that point

is any of this in the spirit of twtxt? no, not in the slightest, lmao

# url = field in your feed. I'm not sure if you had already, but the first url field is kind of important in your feed as it is used as the "Hashing URI" for threading.

@prologic@twtxt.net @movq@www.uninformativ.de My metadata only has my HTTPS URL. I didn’t consider having multiple. I was talking about my config.yaml. Jenny sounds like a good client, so I might give that a try.

@dce@hashnix.club Ah, oh, well then. 🥴

My client supports that, if you set multiple url = fields in your feed’s metadata (the top-most one must be the “main” URL, that one is used for hashing).
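To make the "top-most url is used for hashing" rule concrete, here is a rough sketch. It assumes the hash is computed the way I understand the Twt Hash extension (blake2b-256 over url, timestamp and text joined by newlines, base32-encoded without padding, lowercased, last 7 characters); please check the spec before relying on it. The helper names are hypothetical.

```python
import base64
import hashlib

def hashing_url(meta_urls, fetch_url):
    """Pick the first url metadata field, falling back to the URL the feed was fetched from."""
    return meta_urls[0] if meta_urls else fetch_url

def twt_hash(url, timestamp, text):
    """Sketch of a twt hash: blake2b-256, base32 without padding, lowercase, last 7 chars."""
    payload = "\n".join((url, timestamp, text)).encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    encoded = base64.b32encode(digest).decode("ascii").lower().rstrip("=")
    return encoded[-7:]
```

Because the URL is part of the hashed payload, swapping the order of two url = fields changes every hash in the feed, which is why the first one should stay put.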

But yeah, multi-protocol feeds can be problematic and some have considered it a mistake to support them. 🤔

@prologic@twtxt.net Yeah, this really could use a proper definition or a “manifest”. 😅 Many of these ideas are not very widespread. And I haven’t come across similar projects in all these years.

Let’s take the farbfeld image format as an example again. I think this captures the “spirit” quite well, because this isn’t even about code.

This is the entire farbfeld spec:

farbfeld is a lossless image format which is easy to parse, pipe and compress. It has the following format:

╔════════╤═════════════════════════════════════════════════════════╗
║ Bytes  │ Description                                             ║
╠════════╪═════════════════════════════════════════════════════════╣
║ 8      │ "farbfeld" magic value                                  ║
╟────────┼─────────────────────────────────────────────────────────╢
║ 4      │ 32-Bit BE unsigned integer (width)                      ║
╟────────┼─────────────────────────────────────────────────────────╢
║ 4      │ 32-Bit BE unsigned integer (height)                     ║
╟────────┼─────────────────────────────────────────────────────────╢
║ [2222] │ 4x16-Bit BE unsigned integers [RGBA] / pixel, row-major ║
╚════════╧═════════════════════════════════════════════════════════╝

The RGB-data should be sRGB for best interoperability and not alpha-premultiplied.

(Now, I don’t know if your screen reader can work with this. Let me know if it doesn’t.)

I think these are some of the properties worth mentioning:

- The spec is extremely short. You can read this in under a minute and fully understand it. That alone is gold.

- There are no “knobs”: It’s just a single version, it’s not like there’s also an 8-bit color depth version and one for 16-bit and one for extra large images and one that supports layers and so on. This makes it much easier to implement a fully compliant program.

- Despite being so simple, it’s useful. I’ve used it in various programs, like my window manager, my status bars, some toy programs like “tuxeyes” (an Xeyes variant), or Advent of Code.

- The format does not include compression because it doesn’t need to. Just use something like bzip2 to get file sizes similar to PNG.

- It doesn’t cover every use case under the sun, but it does cover the most important ones (imho). They have discussed using something other than RGBA and decided it’s not worth the trouble.

- They refrained from adding extra baggage like metadata. It would have needlessly complicated things.
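To illustrate how little code a compliant implementation needs, here is a sketch of a reader and writer that follows the table above literally (8-byte magic, two 32-bit big-endian sizes, then four 16-bit big-endian values per pixel). The function names and the in-memory pixel representation (a flat list of RGBA tuples) are my own choices, not part of the spec.

```python
import struct

MAGIC = b"farbfeld"

def encode_farbfeld(width, height, pixels):
    """Serialize row-major (r, g, b, a) tuples of 16-bit values into farbfeld bytes."""
    if len(pixels) != width * height:
        raise ValueError("pixel count does not match width * height")
    out = bytearray(MAGIC + struct.pack(">II", width, height))
    for rgba in pixels:
        out += struct.pack(">4H", *rgba)
    return bytes(out)

def decode_farbfeld(data):
    """Parse farbfeld bytes back into (width, height, pixels)."""
    if data[:8] != MAGIC:
        raise ValueError("missing farbfeld magic value")
    width, height = struct.unpack(">II", data[8:16])
    count = width * height
    flat = struct.unpack(f">{count * 4}H", data[16:16 + count * 8])
    pixels = [tuple(flat[i:i + 4]) for i in range(0, len(flat), 4)]
    return width, height, pixels
```

A 2x1 image is 16 header bytes plus 8 bytes per pixel, so 32 bytes in total.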

SSRF via PDF Generator? Yes, and It Led to EC2 Metadata Access

👨💻Free Article Link

[Continue reading on InfoSec Write-ups »](https://infosecwriteups.com/ssrf-via-pdf-generator-yes-and-it-led-to-ec2-metadata-access-39b8e5b41840 … ⌘ Read more

How to Clear CoreSpotlight Metadata on Mac When Taking Up Large Amounts of Storage

Spotlight is the powerful search engine built into MacOS that allows you to quickly find any file or data on your Mac disk drives. Part of what makes Spotlight so fast is that it uses caches and temporary files during indexing to quickly refer to data on your Mac, but sometimes those Spotlight files can … Read More ⌘ Read more

dm-only.txt feeds. 😂

by commenting out DMs are you giving up on simplicity? See the Metadata extension holding the data inside comments, as the client doesn’t need to show it inside the timeline.

I don’t think that commenting out DMs as we are doing for metadata is giving up on simplicity (it’s a feature already), and it helps to hide unwanted DMs from clients that will take months to add support for something named… an extension.

For some other extensions in https://twtxt.dev/extensions.html (for example the reply-to hash #abcdfeg or the mention @ < example http://example.org/twtxt.txt >) it is not a big deal. The twt is still understandable in plain text.

For DMs, it’s only interesting for you if you are the recipient; otherwise you see a scrambled message like 1234567890abcdef=. Even if you see it, you’ll need some decryption to read it. I’ve said before that DMs shouldn’t be in the same section as the timeline, as it’s confusing.

So my point stands, and as I’ve said before, we are discussing it as a community, so let’s see what other maintainers add to the convo.

I think that having a dm-only.txt, twtxt-dm.txt or any other name for an alternative file is the most sensible approach. The name could be specified in the metadata.

@prologic@twtxt.net Spring cleanup! That’s one way to encourage people to self-host their feeds. :-D

Since I’m only interested in the url metadata field for hashing, I do not keep any comments or metadata for that matter, just the messages themselves. The last time I fetched was probably some time yesterday evening (UTC+2). I cannot tell exactly, because the recorded last fetch timestamp has been overridden with today’s by now.

I dumped my new SQLite cache into: https://lyse.isobeef.org/tmp/backup.tar.gz This time maybe even correctly, if you’re lucky. I’m not entirely sure. It took me a few attempts (date and time were separated by space instead of T at first, I normalized offsets +00:00 to Z as yarnd does and converted newlines back to U+2028). At least now the simple cross check with the Twtxt Feed Validator does not yield any problems.
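The three normalization steps mentioned there (space to T, +00:00 to Z, newlines back to U+2028) could look roughly like this. This is only a sketch of the idea with made-up function names, not the code actually used for the dump.

```python
def normalize_timestamp(ts):
    """Use 'T' between date and time and write a +00:00 offset as 'Z'."""
    ts = ts.strip().replace(" ", "T", 1)
    if ts.endswith("+00:00"):
        ts = ts[:-6] + "Z"
    return ts

def encode_newlines(text):
    """Store multi-line twt text on one line by using U+2028 as the separator."""
    return text.replace("\r\n", "\n").replace("\n", "\u2028")
```

Other offsets such as +02:00 are left untouched, so only the spelling of UTC changes.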

hmm i need to start storing feed preambles so i can capture metadata like that

@bender@twtxt.net I never implemented it actually. That’s why we have the # refresh = metadata field, which those that yell loudly enough can add to their feeds. Otherwise yarnd uses WebSub between pods and is fairly dumb. I could never find an “intelligent” way to back off without hurting freshness.

@eapl.me@eapl.me I think the benefits do not outweigh the disadvantages. Clients would have to read and merge the information from two .txt files, and a new metadata field would have to be added with the address of this file.

Also, it is very easy to filter or ignore it.

Introducing rpi-image-gen for customized Raspberry Pi images

Raspberry Pi has announced rpi-image-gen, a tool to create custom software images for its devices.

rpi-image-gen is a Bash orientated scripting engine capable of producing software images with different on-disk partition layouts, file systems and profiles using collections of metadata and a defined flow of execution. It provides the means to create a hig … ⌘ Read more

lang=en @xuu@txt.sour.is gotcha!

From that PR #17 I think it was reverted? We could discuss metadata later this month, as it seems that I’m the only person using it.

I’ve added a [lang=en] to this twt to see current yarn behaviour.

For point 1 and others using the metadata tags. we have implemented them in yarnd as [lang=en][meta=data]

@doesnm@doesnm.p.psf.lt Threema is also known to be crippled to state actors and the five eyes. It has known crypto protocol weaknesses that can leak metadata.

I haven’t read the entire specification, but I think there is a fundamental design problem. Why would someone put an encrypted message on a public feed that is completely useless to everybody other than the one recipient? This doesn’t make sense to me. It of course depends on the threat model, but wouldn’t one also want to minimize the publicly visible metadata (who is communicating with whom and when) when privately messaging? I feel there are better ways to accomplish this. Sorry, if I miss the obvious use case, please let me know. :-)

although I agree that it helps, I don’t think it’s completely correct to leave the nick definition to the source .txt. It could be wrong from the start or become outdated over time.

I’d rather get it from the mentioned .txt nick metadata (could be cached for performance).

So my vote would be to make it mandatory to follow @<name url> but only using that name/nick if the URL doesn’t contain another nick.

A main advantage is that when the destination URL changes the nick, it’ll be automagically updated in the thread view (as happens with some other microblogging platforms, following the Jakob’s Law)
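A small sketch of that preference order: use the nick the mentioned feed declares in its own metadata if we know it, otherwise the nick written in the mention, otherwise the bare URL. The `feed_nicks` cache (URL to nick) and the regex are assumptions for illustration.

```python
import re

# Assumption: mentions are written as "@<nick url>" or "@<url>".
MENTION_RE = re.compile(r"@<(?:(\S+)\s+)?(\S+?)>")

def resolve_mentions(text, feed_nicks):
    """Return (display_nick, url) pairs, preferring the nick the mentioned feed declares."""
    result = []
    for m in MENTION_RE.finditer(text):
        written_nick, url = m.group(1), m.group(2)
        result.append((feed_nicks.get(url) or written_nick or url, url))
    return result
```

With a cache like this, a changed nick in the destination feed shows up in old threads as soon as the cache entry is refreshed.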

I’m still making progress with the Emacs client. I’m proud to say that the code that is responsible for reading the feeds is almost finished, including: Twt Hash Extension, Twt Subject Extension, Multiline Extension and Metadata Extension. I’m fine-tuning some tests and will soon do the first buffer that displays the twts.

Lol, seems yarn does not display metadata on @terron@duque-terron.cat

nick = _ compared to just not defining a nick and letting the client use the domain as the handle?

You are right: no advantage. Also your method stays backward compatible with feeds which don’t implement the metadata extension

Introducing Annotated Logger: A Python package to aid in adding metadata to logs

We’re open sourcing Annotated Logger, a Python package that helps make logs searchable with consistent metadata.

The post [Introducing Annotated Logger: A Python package to aid in adding metadata to logs](https://github.blog/developer-skills/programming-languages-and-frameworks/introducing-annotated-logger-a-python-package-to-aid-in-a … ⌘ Read more

The Chinese hack that has Australia on high alert

A notorious Chinese hacking group has stolen a vast amount of Americans’ metadata, and Australian officials are worried. ⌘ Read more

Lol, metadata extension should be optional for backward-compatibility

@eapl.me@eapl.me Neat.

So for twt metadata the lextwt parser currently supports values in the form [key=value]

https://git.mills.io/yarnsocial/go-lextwt/src/branch/main/parser_test.go#L692-L698
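For readers who don’t want to dig through the Go tests, here is a tiny sketch of what pulling leading [key=value] groups off a twt could look like. It is written from the description above only and is not a port of lextwt; the regex (keys of letters, digits, `_`, `-`; values without spaces or `]`) is an assumption.

```python
import re

# Assumption: tags are a run of "[key=value]" groups at the very start of the twt text.
TAG_RE = re.compile(r"\[([A-Za-z0-9_-]+)=([^\]\s]*)\]\s*")

def split_twt_tags(text):
    """Return (tags, remaining_text) for leading [key=value] groups."""
    tags = {}
    pos = 0
    while True:
        m = TAG_RE.match(text, pos)
        if not m:
            break
        tags[m.group(1)] = m.group(2)
        pos = m.end()
    return tags, text[pos:]
```

Groups that appear later in the text are left alone, so a twt that merely talks about [lang=en] is not affected.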

@eapl.me@eapl.me here are my replies (somewhat similar to Lyse’s and James’)

Metadata in twts: Key=value is too complicated for non-hackers and hard to write by hand. So if there is a need then we should just use #NSFW or the alt-text field in markdown image syntax if something is NSFW.

IDs besides datetime: When you edit a twt then you should preserve the datetime if location-based addressing should have any advantages over content-based addressing. If you change the timestamp then it’s a new post. Just like any other blog CMS.

Caching, Yes all good ideas, but that is more a task for the clients not the serving of the twtxt.txt files.

Discovery: User-Agent for discovery can be improved. I’m working on a wrapper script in PHP, so you don’t need to go to Apache’s log files to see who fetches your feed. But for other protocols like Gemini and Gopher you need to rely on something else. That could be using my webmentions for twtxt suggestion, or simply defining an email metadata field for letting a person know you follow their feed. Interesting read about why Webmentions might be a bad idea. With twtxt being much simpler than a full-featured IndieWeb site, a lot of the concerns do not apply here. But that’s the issue with any open inbox. This is hard to solve without some form of (centralized or community) spam moderation.

Support more protocols besides http/s. Yes why not, if we can make clients that merge or differentiate between the same feed served by multiple URLs.

Languages: If the need is big then make a separate feed. I don’t mind seeing stuff in other languages as long as the volume is low. You’ve got translation tools if you need to know what’s going on. And again, when there is a need for easier switching between posting to several feeds, then it’s about building clients with a UI that makes it easy. Not something that should take up space in the format/protocol.

Emojis: I’m not sure what this is about. Do you want to use emojis as avatars in CLI clients or is it just about rendering emojis?

Righto, @eapl.me@eapl.me, ta for the writeup. Here we go. :-)

Metadata on individual twts are too much for me. I do like the simplicity of the current spec. But I understand where you’re coming from.

Numbering twts in a feed is basically the attempt of generating message IDs. It’s an interesting idea, but I reckon it is not even needed. I’d simply use location based addressing (feed URL + ‘#’ + timestamp) instead of content addressing. If one really wanted to, one could hash the feed URL and timestamp, but the raw form would actually improve discoverability and would not even require a richer client. But the majority of twtxt users in the last poll wanted to stick with content addressing.
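Location based addressing as described here needs next to no code. A sketch, with hypothetical function names, of building such a reference and taking it apart again:

```python
def location_id(feed_url, timestamp):
    """Build a reference of the form feed URL + '#' + timestamp."""
    return f"{feed_url}#{timestamp}"

def split_location_id(ref):
    """Split a reference at the last '#' into (feed_url, timestamp)."""
    feed_url, _, timestamp = ref.rpartition("#")
    return feed_url, timestamp
```

The reference stays readable and tells a plain client exactly which feed to fetch, which is the discoverability argument made above.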

yarnd actually sends If-Modified-Since request headers. Not only can I observe heaps of 304 responses for yarnds in my access log, but in Cache.FetchFeeds(…) we can actually see If-Modified-Since being deployed when the feed has been retrieved with a Last-Modified response header before: https://git.mills.io/yarnsocial/yarn/src/commit/98eee5124ae425deb825fb5f8788a0773ec5bdd0/internal/cache.go#L1278

Turns out etags with If-None-Match are only supported when yarnd serves avatars (https://git.mills.io/yarnsocial/yarn/src/commit/98eee5124ae425deb825fb5f8788a0773ec5bdd0/internal/handlers.go#L158) and media uploads (https://git.mills.io/yarnsocial/yarn/src/commit/98eee5124ae425deb825fb5f8788a0773ec5bdd0/internal/media_handlers.go#L71). However, it ignores possible etags when fetching feeds.
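For client authors who want both mechanisms when fetching feeds, here is a sketch using Python’s standard urllib rather than yarnd’s Go code. The function name and the idea of passing in the previously stored Last-Modified and ETag values are assumptions.

```python
import urllib.request

def conditional_request(url, last_modified=None, etag=None):
    """Build a GET request that lets the server answer 304 Not Modified."""
    req = urllib.request.Request(url)
    if last_modified:
        # Value of the Last-Modified response header from the previous fetch.
        req.add_header("If-Modified-Since", last_modified)
    if etag:
        # Value of the ETag response header from the previous fetch.
        req.add_header("If-None-Match", etag)
    return req
```

When such a request is sent with urllib.request.urlopen, a 304 answer surfaces as an urllib.error.HTTPError with code 304, so the caller should catch that and keep the cached copy.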

I don’t understand how the discovery URLs should work to replace the User-Agent header in HTTP(S) requests. Do you mind to elaborate?

Different protocols are basically just a client thing.

I reckon it’s best to just avoid mixing several languages in one feed in the first place. Personally, I find it okay to occasionally write messages in other languages, but if that happens on a more regular basis, I’d definitely create a different feed for other languages.

Isn’t the emoji thing “just” a client feature? So, feeds do not even have to state any emojis. As a user I’d configure my client to use a certain symbol for feed ABC. Currently, I can do a similar thing in tt where I assign colors to feeds. On the other hand, what if a user wants to control what symbol should be displayed, similar to the feed’s nick? Hmm. But still, my terminal font doesn’t even render most emojis. So, Unicode boxes everywhere. This makes me think it should actually be a client-only feature.

description header. Or rather, how often it re-fetches it.

So, @prologic@twtxt.net, Yarn isn’t rendering the metadata as described in the format documentation. That is, \u2028 is ignored when Yarn renders the description metadata.

👋 PR to Update Metadata ext to clarify avatar field cc @lyse @movq @sorenpeter and @Codebuzz

Web interface is deleted in https://git.mills.io/saltyim/saltyim/commit/376de2702319686c902ec03b8ca1e17b020fc639 but seemingly incorrectly (in source i see git lfs metadata). Can be built if you grab https://git.mills.io/saltyim/saltyim/src/commit/15a64de82829/internal/web/app.wasm and place it in source (go directory has cached source) and rebuild

@2024-10-08T19:36:38-07:00@a.9srv.net Thanks for the followup. I agree with most of it - especially:

Please nobody suggest sticking the content type in more metadata. 🙄

Yes, URLs can be considered ugly, but they work and are understandable by both humans and machines. And it’s trivial for any client to hide the URLs used as references in replies/threading.

Webfinger can be an add-on to help lookup people, and it can be made independent of the nick by just serving the same json regardless of the nick as people do with static sites and as I implemented it on darch.dk (wf endpoint). Try RANDOMSTRING@darch.dk on http://darch.dk/wf-lookup.php (wf lookup) or RANDOMSTRING@garrido.io on https://webfinger.net

Yes, that is exactly what I meant. I like that collection and “twtxt v2” feels like a departure.

Maybe there’s an advantage to grouping it into one spec, but IMO that shouldn’t be done at the same time as introducing new untested ideas.

See https://yarn.social (especially this section: https://yarn.social/#self-host) – It really doesn’t get much simpler than this 🤣

Again, I like this existing simplicity. (I would even argue you don’t need the metadata.)

That page says “For the best experience your client should also support some of the Twtxt Extensions…” but it is clear you don’t need to. I would like it to stay that way, and publishing a big long spec and calling it “twtxt v2” feels like a departure from that. (I think the content of the document is valuable; I’m just carping about how it’s being presented.)

Good writeup, @anth@a.9srv.net! I agree to most of your points.

3.2 Timestamps: I feel no need to mandate UTC. Timezones are fine with me. But I could also live with this new restriction. I fail to see, though, how this change would make things any easier compared to the original format.

3.4 Multi-Line Twts: What exactly do you think are bad things with multi-lines?

4.1 Hash Generation: I do like the idea with a new uuid metadata field! Any thoughts on two feeds selecting the same UUID for whatever reason? Well, the same could happen today with url.

5.1 Reply to last & 5.2 More work to backtrack: I do not understand anything you’re saying. Can you rephrase that?

8.1 Metadata should be collected up front: I generally agree, but if the uuid metadata field were a feed URL and no real UUID, there should be probably an exception to change the feed URL mid-file after relocation.

@david@collantes.us Thanks, that’s good feedback to have. I wonder to what extent this already exists in registry servers and yarn pods. I haven’t really tried digging into the past in either one.

How interested would you be in changes in metadata and other comments in the feeds? I’m thinking of just permanently saving every version of each twtxt file that gets pulled, not just the twts. It wouldn’t be hard to do (though presenting the information in a sensible way is another matter). Compression should make storage a non-issue unless someone does something weird with their feed like shuffle the comments around every time I fetch it.

@falsifian@www.falsifian.org You mean the idea of being able to inline # url = changes in your feed?

Yes, that one. But @lyse@lyse.isobeef.org pointed out that it suffers from a compatibility issue, since currently the first listed url is used for hashing, not the last. Unless your feed is in reverse chronological order. Heh, I guess another metadata field could indicate which version to use.

Or maybe url changes could somehow be combined with the archive feeds extension? Could the url metadata field be local to each archive file, so that to switch to a new url all you need to do is archive everything you’ve got and start a new file at the new url?

I don’t think it’s that likely my feed url will change.

@prologic@twtxt.net Some criticisms and a possible alternative direction:

Key rotation. I’m not a security person, but my understanding is that it’s good to be able to give keys an expiry date and replace them with new ones periodically.

It makes maintaining a feed more complicated. Now instead of just needing to put a file on a web server (and scan the logs for user agents) I also need to do this. What brought me to twtxt was its radical simplicity.

Instead, maybe we should think about a way to allow old urls to be rotated out? Like, my metadata could somehow say that X used to be my primary URL, but going forward from date D onward my primary url is Y. (Or, if you really want to use public key cryptography, maybe something similar could be used for key rotation there.)

It’s nice that your scheme would add a way to verify the twts you download, but https is supposed to do that anyway. If you don’t trust https to do that (maybe you don’t like relying on root CAs?) then maybe your preferred solution should be reflected by your primary feed url. E.g. if you prefer the security offered by IPFS, then maybe an IPNS url would do the trick. The fact that feed locations are URLs gives some flexibility. (But then rotation is still an issue, if I understand ipns right.)

@bender@twtxt.net Yes, they do 🤣 Implicitly, or threading would never work at all 😅 Nor lookups 🤣 They are used as keys. Think of them like a primary key in a database or index. I totally get where you’re coming from, but there are trade-offs with using Message/Thread Ids as opposed to Content Addressing (like we do) and I believe we would just encounter other problems by doing so.

My money is on extending the Twt Subject extension to support more (optional) advanced “subjects”; i.e: indicating you edited a Twt you already published in your feed as @falsifian@www.falsifian.org indicated 👌

Then we have a secondary (but much rarer) problem of the “identity” of a feed in the first place. Using the URL you fetch the feed from as @lyse@lyse.isobeef.org’s client tt seems to do or using the # url = metadata field as every other client does (according to the spec) is problematic when you decide to change where you host your feed. In fact the spec says:

Users are advised to not change the first one of their urls. If they move their feed to a new URL, they should add this new URL as a new url field.

See Choosing the Feed URL – This is one of our longest debates and challenges, and I think (I suspect along with @xuu@txt.sour.is) that the right way to solve this is to use public/private key(s) where you actually have a public key fingerprint as your feed’s unique identity that never changes.

All this hash breakage made me wonder if we should try to introduce “message IDs” after all. 😅

But the great thing about the current system is that nobody can spoof message IDs. 🤔 When you think about it, message IDs in e-mails only work because (almost) everybody plays fair. Nothing stops me from using the same Message-ID header in each and every mail, that would break e-mail threading all the time.

In Yarn, twt hashes are derived from twt content and feed metadata. That is pretty elegant and I’d hate to see us lose that property.

If we wanted to allow editing twts, we could do something like this:

2024-09-05T13:37:40+00:00 (~mp6ox4a) Hello world!

Here, mp6ox4a would be a “partial hash”: To get the actual hash of this twt, you’d concatenate the feed’s URL and mp6ox4a and get, say, hlnw5ha. (Pretty similar to the current system.) When people reply to this twt, they would have to do this:

2024-09-05T14:57:14+00:00 (~bpt74ka) (#hlnw5ha) Yes, hello!

That second twt has a partial hash of bpt74ka and is a reply to the full hash hlnw5ha. The author of the “Hello world!” twt could then edit their twt and change it to 2024-09-05T13:37:40+00:00 (~mp6ox4a) Hello friends! or whatever. Threading wouldn’t break.

Would this be worth it? It’s certainly not backwards-compatible. 😂

@prologic@twtxt.net Fair enough! I just added some metadata.

@stigatle@yarn.stigatle.no / @abucci@anthony.buc.ci My current working theory is that there is an asshole out there that has a feed that both your pods are fetching with a multi-GB avatar URL advertised in their feed’s preamble (metadata). I’d love for you both to review this PR, and once merged, re-roll your pods and dump your respective caches and share with me using https://gist.mills.io/
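One generic guard against that kind of oversized resource is to stop reading once a byte limit is exceeded. The sketch below shows that idea in Python; it is not necessarily what the PR does, and `read_capped` and its parameters are made up for illustration.

```python
def read_capped(stream, max_bytes, chunk_size=65536):
    """Read a file-like object fully, but give up once more than max_bytes arrive."""
    chunks = []
    total = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        total += len(chunk)
        if total > max_bytes:
            raise ValueError("resource exceeds size limit")
        chunks.append(chunk)
    return b"".join(chunks)
```

Reading in chunks means a multi-GB avatar costs at most one chunk beyond the limit instead of filling memory or disk.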

Thanks for your feedback @lyse@lyse.isobeef.org. For some reason i missed it until now. For now I have implemented endpoint discovery for #webmentions as a metadata field in the twtxt.txt like this:

# webmention = http://darch.dk/timeline/webmention

Linus Torvalds Has ‘Robust Exchanges’ Over Filesystem Suggestion on Linux Kernel Mailing List

Linus Torvalds had “some robust exchanges” on the Linux kernel mailing list with a contributor from Google. The subject was inodes, notes the Register, “which as Red Hat puts it are each ‘a unique identifier for a specific piece of metadata on a given filesystem.’”

Inodes have been the subj … ⌘ Read more

I’ve been thinking of how to notify someone else that you’ve replied to their twts.

Is there something already developed, for example on yarn.social?

Let’s say I want to notify https://sour.is/tiktok/America/Denver.txt that I’ve replied to some twt. They don’t follow me back, so they won’t see my reply.

I would send my URL to, could be, https://sour.is/tiktok/replies?url=MY_URL and they’ll check that I have a reply to some of their twts, and could decide to follow me back (after seeing my twtxt profile to avoid spam)

Another option could be having a metadata like

follow-request=https://sour.is/tiktok/America/Denver.txt TIMESTAMP_IN_SECONDS

that the other client has to look for, to ensure that the request comes from that URL (again, to avoid spam)

This could be deleted after the other .txt has your URL in the follow list, or auto-expire after X days to clean-up old requests.

What do you think?
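The follow-request idea above could be handled on the receiving side roughly like this. It is a sketch that assumes the value is exactly "URL TIMESTAMP_IN_SECONDS" separated by a space, and that expiry is counted in days; the function names are hypothetical.

```python
import time

def parse_follow_request(value):
    """Split 'URL TIMESTAMP_IN_SECONDS' into (url, timestamp)."""
    url, _, ts = value.strip().rpartition(" ")
    return url, int(ts)

def is_active(timestamp, max_age_days, now=None):
    """True while the request is younger than max_age_days."""
    now = time.time() if now is None else now
    return now - timestamp < max_age_days * 86400
```

A client would only act on a request whose URL matches its own feed and that is still active, and could silently drop the rest.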

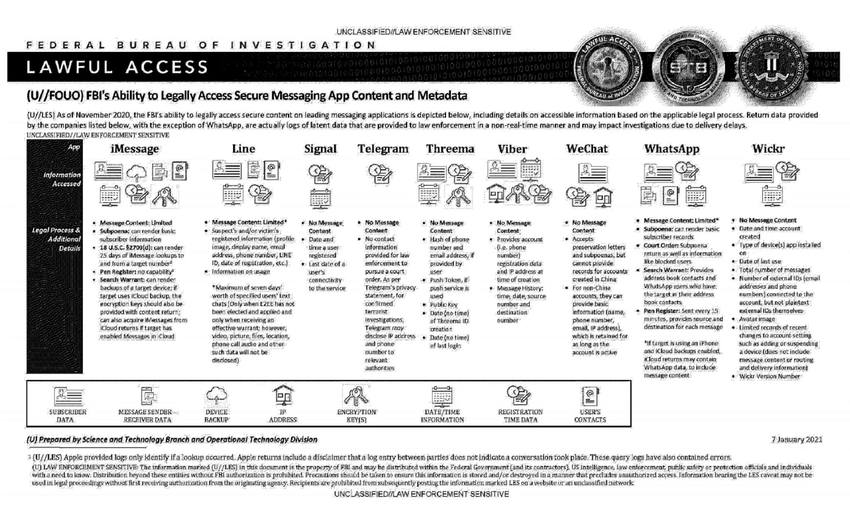

An official FBI document dated January 2021, obtained by the American association “Property of People” through the Freedom of Information Act.

This document summarizes the possibilities for legal access to data from nine instant messaging services: iMessage, Line, Signal, Telegram, Threema, Viber, WeChat, WhatsApp and Wickr. For each software, different judicial methods are explored, such as subpoena, search warrant, active collection of communications metadata (“Pen Register”) or connection data retention law (“18 USC§2703”). Here, in essence, is the information the FBI says it can retrieve:

Apple iMessage: basic subscriber data; in the case of an iPhone user, investigators may be able to get their hands on message content if the user uses iCloud to synchronize iMessage messages or to back up data on their phone.

Line: account data (image, username, e-mail address, phone number, Line ID, creation date, usage data, etc.); if the user has not activated end-to-end encryption, investigators can retrieve the texts of exchanges over a seven-day period, but not other data (audio, video, images, location).

Signal: date and time of account creation and date of last connection.

Telegram: IP address and phone number for investigations into confirmed terrorists, otherwise nothing.

Threema: cryptographic fingerprint of phone number and e-mail address, push service tokens if used, public key, account creation date, last connection date.

Viber: account data and IP address used to create the account; investigators can also access message history (date, time, source, destination).

WeChat: basic data such as name, phone number, e-mail and IP address, but only for non-Chinese users.

WhatsApp: the targeted person’s basic data, address book and contacts who have the targeted person in their address book; it is possible to collect message metadata in real time (“Pen Register”); message content can be retrieved via iCloud backups.

Wickr: Date and time of account creation, types of terminal on which the application is installed, date of last connection, number of messages exchanged, external identifiers associated with the account (e-mail addresses, telephone numbers), avatar image, data linked to adding or deleting.

TL;DR Signal is the messaging system that provides the least information to investigators.

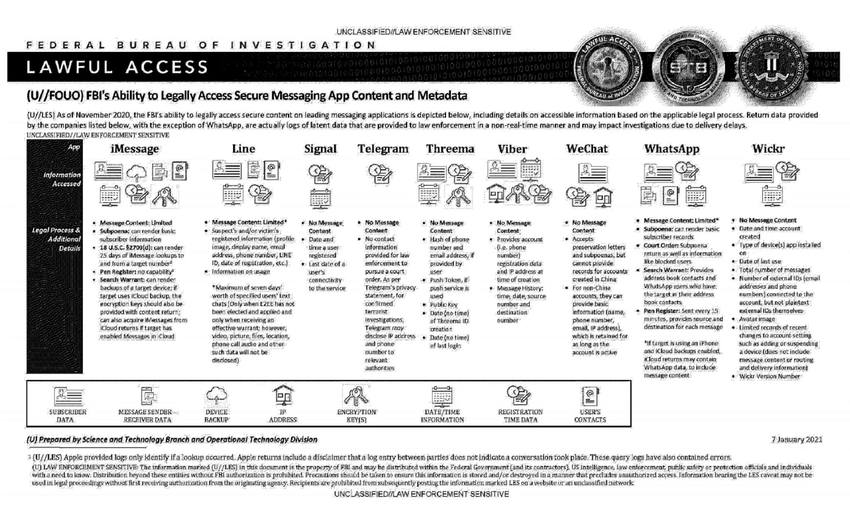

An official FBI document dated January 2021, obtained by the American association “Property of People” through the Freedom of Information Act.

This document summarizes the possibilities for legal access to data from nine instant messaging services: iMessage, Line, Signal, Telegram, Threema, Viber, WeChat, WhatsApp and Wickr. For each software, different judicial methods are explored, such as subpoena, search warrant, active collection of communications metadata (“Pen Register”) or connection data retention law (“18 USC§2703”). Here, in essence, is the information the FBI says it can retrieve:

Apple iMessage: basic subscriber data; in the case of an iPhone user, investigators may be able to get their hands on message content if the user uses iCloud to synchronize iMessage messages or to back up data on their phone.

Line: account data (image, username, e-mail address, phone number, Line ID, creation date, usage data, etc.); if the user has not activated end-to-end encryption, investigators can retrieve the texts of exchanges over a seven-day period, but not other data (audio, video, images, location).

Signal: date and time of account creation and date of last connection.

Telegram: IP address and phone number for investigations into confirmed terrorists, otherwise nothing.

Threema: cryptographic fingerprint of phone number and e-mail address, push service tokens if used, public key, account creation date, last connection date.

Viber: account data and IP address used to create the account; investigators can also access message history (date, time, source, destination).

WeChat: basic data such as name, phone number, e-mail and IP address, but only for non-Chinese users.

WhatsApp: the targeted person’s basic data, address book and contacts who have the targeted person in their address book; it is possible to collect message metadata in real time (“Pen Register”); message content can be retrieved via iCloud backups.

Wickr: Date and time of account creation, types of terminal on which the application is installed, date of last connection, number of messages exchanged, external identifiers associated with the account (e-mail addresses, telephone numbers), avatar image, data linked to adding or deleting.

TL;DR Signal is the messaging system that provides the least information to investigators.

Metadata from a single picture can destroy your privacy

What someone can learn from your Image EXIF Metadata, and how to secure your photos ⌘ Read more

Dependabot relieves alert fatigue from npm devDependencies

A new alert rules engine for Dependabot leverages alert metadata to identify and auto-dismiss up to 15% of alerts as false positives. ⌘ Read more

💡 Quick ‘n Dirty prototype Yarn.social protocol/spec:

If we were to decide to write a new spec/protocol, what would it look like?

Here’s my rough draft (back of paper napkin idea):

- Feeds are JSON file(s) fetchable by standard HTTP clients over TLS

- WebFinger is used at the root of a user’s domain (or multi-user pod) for lookup, e.g.:

prologic@mills.io -> https://yarn.mills.io/~prologic.json

- Feeds contain similar metadata that we’re familiar with: Nick, Avatar, Description, etc

- Feed items are signed with an Ed25519 private key. That is, all “posts” are cryptographically signed.

- Feed items continue to use content-addressing, but use the full Blake2b Base64 encoded hash.

- Edited feed items produce an “Edited” item so that clients can easily follow Edits.

- Deleted feed items produce a “Deleted” item so that clients can easily delete cached items.
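
As a back-of-the-napkin illustration of the content-addressing and Edited/Deleted ideas in this draft, something like the following could work. Everything here is an assumption: the item field names, hashing "feed URL, timestamp, text" joined by newlines, and the 32-byte digest size are not specified by the draft. Ed25519 signing is left out because it needs a third-party library.

```python
import base64
import hashlib


def item_hash(feed_url, timestamp, text):
    """Full (untruncated) Blake2b hash of an item, Base64 encoded."""
    payload = "\n".join([feed_url, timestamp, text]).encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    return base64.urlsafe_b64encode(digest).decode("ascii")


def make_post(feed_url, timestamp, text):
    return {"type": "Post", "id": item_hash(feed_url, timestamp, text),
            "created": timestamp, "text": text}


def make_edit(feed_url, timestamp, original_id, new_text):
    # An edit is a new item that points back at the item it replaces.
    return {"type": "Edited", "id": item_hash(feed_url, timestamp, new_text),
            "replaces": original_id, "created": timestamp, "text": new_text}


def make_delete(timestamp, original_id):
    return {"type": "Deleted", "target": original_id, "created": timestamp}


def apply_items(items):
    """Replay feed items in order and return the client's cache (id -> text)."""
    cache = {}
    for item in items:
        if item["type"] == "Post":
            cache[item["id"]] = item["text"]
        elif item["type"] == "Edited":
            cache.pop(item["replaces"], None)
            cache[item["id"]] = item["text"]
        elif item["type"] == "Deleted":
            cache.pop(item["target"], None)
    return cache


FEED = "https://yarn.mills.io/~prologic.json"
first = make_post(FEED, "2024-01-01T00:00:00Z", "Helo world")
fixed = make_edit(FEED, "2024-01-01T00:05:00Z", first["id"], "Hello world")
second = make_post(FEED, "2024-01-02T00:00:00Z", "Oops, wrong feed")
gone = make_delete("2024-01-02T00:01:00Z", second["id"])

cache = apply_items([first, fixed, second, gone])
```

A client that follows the Edited and Deleted items ends up caching only the corrected first post.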

**RT by @mind_booster: My new hobby: finding public domain images that Getty sells for $500, locating hi-rez scans of their original publications, cropping and cleaning them up, adding metadata, and uploading them to Wikimedia Commons.

First one: https://commons.wikimedia.org/wiki/File:Fig_6_Le_Telephone_by_T_De_Moncel_Paris_1878.png**

My new hobby: finding public domain images that Getty sells for $500, locating hi-rez scans of their original publications, cropping and cleaning them up, adding metadata, and uplo … ⌘ Read more

GitHub Security Lab audited DataHub: Here’s what they found

The GitHub Security Lab audited DataHub, an open source metadata platform, and discovered several vulnerabilities in the platform’s authentication and authorization modules. These vulnerabilities could have enabled an attacker to bypass authentication and gain access to sensitive data stored on the platform. ⌘ Read more

@prologic@twtxt.net: I understand the benefits of using hashes, it’s much easier to implement client applications (at the expense of ease of use without the proper client). I must say that I like the way the metadata extension is done. Simple and elegant! It’s hard to design simple things!

“Bloggers, Dump Your Twitter Card Tags”

It’s crazy to think how much bandwidth is being used by metadata tags. Every company wants to invent its own new system. Wouter Groeneveld gives a brief overview and recommends getting rid of them (for the most part). I agree with him completely. The only one of these systems that my blog supports is Microformats, which is quite popular among the IndieWeb community. ⌘ Read more

Dino: Stateless File Sharing: Source Attachment and Wrap-Up

Recap

Stateless file sharing (sfs) is a generic file sharing message which, alongside metadata, sends a list of sources where the file can be retrieved from.

It is generic in the sense that sources can be from different kinds of file transfer methods.

HTTP, Jingle and any other file transfers can be encapsulated with it.

The big idea is that functionality can be implemented for all file transfer methods at once, thanks to … ⌘ Read more

Dino: Stateless File Sharing: Async, Metadata with Thumbnails and some UI

Async

Asynchronous programming is a neat tool, until you work with a foreign project in a foreign language using it.

As a messenger, Dino uses lots of asynchronous code, not always though.

Usually my progress wasn’t hindered by such instances, but sometimes I had to work around it.

Async in Vala

No surprises here.

Functions are annotated with async, and yield expressions that are asyn … ⌘ Read more

@prologic@twtxt.net I have added that to my twtxt file. Even if I can’t see the metadata the people from yarn can. Not a big deal to add the metadata and it helps yarn users

@prologic@twtxt.net I don’t think Jenny does much of anything with the avatar and description, but I do know yarn does, and it’s not a bad thing to include the metadata for those users.

Dino: Stateless File Sharing: Base implementation

The last few weeks were quite busy for me, but there was also a lot of progress.

I’m happy to say that the base of stateless file sharing is implemented and working.

Let’s explore some of the more interesting topics.

File hashes have some practical applications, such as file validation and duplication detection.

As such, they are part of the [metadata element](https://xmpp.org/extensio … ⌘ Read more

Just reading in-depth and trying to understand the security model of Delta.Chat a bit more… There are a few things that really concern me about how Delta.Chat, which relies on Autocrypt, works:

- There is no Perfect Forward Secrecy

- No verification of keys

- Is therefore susceptible to Man-in-the-Middle attacks

- Metadata is a BIG problem with Delta.Chat:

- The To and From and Date are trackable by your Mail provider (amongst many other headers)

- The

Hmmm 🤔 cc @deebs@twtxt.net

@movq@www.uninformativ.de

I would recommend a longer rotation, perhaps? The way I see it, you are proposing a monthly one. That can make metadata huge too. Maybe yearly, or every 6 months?

@xuu@txt.sour.is Btw… I noticed your pod has some changes I’m not familiar with, for example you seem to have added metadata to the top of feeds. Can you enumerate the improvements/changes you’ve made and possibly let’s discuss contributing them back upstream? :D

It’s a new one in the instant messaging game :P https://getsession.org It’s a fork of Signal and claims no metadata. I too have my chatting tools, but that doesn’t stop me from checking out new stuff ^^

Metadata: Judoing the Dunning-Kruger effect: the “surprisingly-popular option” strategy for crowdsourcing https://muratbuffalo.blogspot.com/2018/11/judoing-dunning-kruger-effect.html

GitHub - jimkang/random-internet-archive: Gets a random Internet Archive resource, in URL form with some metadata. https://github.com/jimkang/random-internet-archive

@mdom@domgoergen.com metadata is there now. I was one commit behind.

@sdk@codevoid.de Great! Though I don’t see any metadata on your feed?

@mdom@domgoergen.com That’s interesting. So does txtnish read that metadata? Or would an end user just look at the file to see it? Is the metadata going to be the standard?

@mdom@domgoergen.com what metadata feature is that?

New feature for txtnish: After setting add_metadata to 1, txtnish will, uhm, add metadata to your twtfile. Currently I only add followings, client and your gpg fingerprint. See my file for an example.

The workflow app on iOS is magic. I now have a button that asks me to select a picture, then converts it to png, resizes it, strips the metadata, scps it to my jumphost, scps it further to my gopher jail and into my paste directory, constructs the http proxy URL and opens it in safari. All without user-interaction. Now I can share my mobile life with you guys! Prepare for cat pictures!

updated metadata on twtxt.txt file

Metadata: Paper review. IPFS: Content addressed, versio… http://muratbuffalo.blogspot.com/2018/02/paper-review-ipfs-content-addressed.html

@reednj@twtxt.xyz I think this would be the first time two clients implement the same #metadata format.

I’ll take another swing at #metadata for #twtxt. You can check my feed for an example. The headers aren’t important, only # key = value

Maybe we shouldn’t add time sensitive metadata. Maybe # following = https://domgoergen.com/twtxt/mdom.txt https://enotty.dk/twtxt.txt …

@kas@enotty.dk, @benaiah@benaiah.me Should metadata always be at the start of the file or can it be interspersed with tweets?

@mdom@domgoergen.com, @kas@enotty.dk re: metadata, I’m (obviously) in favor of my suggestion for metadata-in-comments, but I don’t think we should have comments in comments.

@kas@enotty.dk The amount of whitespace around the equal sign shouldn’t matter. Wouldn’t a comment above the line be enough? <# nick = mdom # my nick> looks weird.
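
For what it's worth, a parser along the lines discussed here can be quite small. This is only a sketch under a few assumptions: whitespace around the equal sign is ignored, metadata may appear anywhere in the file (not only at the top), plain comments without `key = value` are skipped, and repeated keys are collected into a list. The function name and the sample feed are made up.

```python
import re
from collections import defaultdict

# "#", optional whitespace, a key without spaces or "=", then "=" and the value.
META_RE = re.compile(r"^#\s*([^\s=]+)\s*=\s*(.*?)\s*$")


def parse_metadata(feed_text):
    """Collect every `# key = value` comment line into {key: [values...]}."""
    fields = defaultdict(list)
    for line in feed_text.splitlines():
        match = META_RE.match(line)
        if match:
            fields[match.group(1)].append(match.group(2))
    return dict(fields)


# Hypothetical twtfile mixing metadata, a plain comment and a tweet.
sample = (
    "# nick = mdom\n"
    "#following=https://domgoergen.com/twtxt/mdom.txt https://enotty.dk/twtxt.txt\n"
    "2018-01-01T00:00:00Z\thello\n"
    "# client   =   txtnish\n"
    "# just a plain comment\n"
)

metadata = parse_metadata(sample)
following = metadata["following"][0].split()  # space-separated list of feed URLs
```

Tweets and ordinary comments are left alone, so older clients that ignore comment lines keep working.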
