Let’s hope that the two cakes turn out better than last week: https://lyse.isobeef.org/tmp/tote-und-lebendige-kuchen-2025-12-02.jpg Got some gingerbread as backup. Yeah, best lighting…

**Encrypt & Decrypt Database Fields in Spring Boot Like a Pro (2025 Secure Guide)**

“Your database backup just leaked. Is your data still safe?”

[Continue reading on InfoSec Write-ups »](https://infos … ⌘ Read more

NIRS fire destroys [South Korean] government’s cloud storage system, no backups available

Comments ⌘ Read more

DietPi September 2025 Update Brings Faster Backups and Roon Server Early Access

The September 20th release of DietPi v9.17 introduces smaller and more efficient system images, faster backups with reduced disk usage, and a new toggle for Roon Server’s early access builds. The update also addresses SPI bootloader flashing issues on Rockchip devices, improves Raspberry Pi sound card handling, and includes multiple bug fixes across tools and […] ⌘ Read more

There’s always something more urgent: I’ve known for a long time that sooner or later I’d feel prompted to switch from #github to somewhere else (since 2018 at least!), but I’ve been postponing it and only very slowly flirting with the idea… That didn’t work out too badly for me: if I had rushed into it, I would probably have migrated to #gitlab before knowing about its more objectionable sides. In the end, 2025 was the year I finally acted upon the urge to move. I did not do a very thorough analysis of the alternative hosts - what I had been reading about them over the years felt like enough, and I easily decided to choose #codeberg. Being hasty like that, alas, was a mistake: I just now found - during this slow and time-consuming process of deciding what and how to migrate - that there is a low repository limit on codeberg: “The owner has already reached the limit of 100 repositories.” I’m not complaining, mind you, and those “lucky 100” that are already there will stay - at least as a sort of backup. But this means that codeberg is not for me - and so this time I turn to you, the #mastodon community.

What github alternative, not self-hosted, should I move my >100 projects into?

@movq@www.uninformativ.de WE NEED MORE BACKUPS!!!!!!!!!!!1

@kat@yarn.girlonthemoon.xyz Oh no. 😨 Backups! We need more backups!

linode’s having a major outage (ongoing as of writing, over 24 hours in) and my friend runs a site i help out with on one of their servers. we didn’t have recent backups so i got really anxious about possible severe data loss considering the situation with linode doesn’t look great (it seems like a really bad incident).

…anyway the server magically came back online and i got backups of the whole application and database, i’m so relieved :‘)

How Backups Can Break End-to-End Encryption (E2EE) ⌘ Read more

Running monthly backups…

Home Assistant 2025.5 released

Version 2025.5 of the Home Assistant home automation system has been released. With this release, the project is celebrating two million active installations. Changes include improvements to the backup system, Z-Wave Long Range support, a number of new integrations, and more. ⌘ Read more

How to build a fleet of networked offsite backups using Linux, WireGuard and rsync

Comments ⌘ Read more

@xuu@txt.sour.is or @kat@yarn.girlonthemoon.xyz Do either of you have time this weekend to test upgrading your pod to the new cacher branch? 🤔 It is recommended you take a full backup of your pod beforehand, just in case. Keen to get this branch merged and to finally cut a new release after >2 years 🤣

@bender@twtxt.net Lemme look at the old backup…

@prologic@twtxt.net @bmallred@staystrong.run So is restic considered stable by now? “Stable” as in “stable data format”, like a future version will still be able to retrieve my current backups. I mean, it’s at version “0.18”, but they don’t specify which versioning scheme they use.

I use restic and Backblaze B2 for offline backup storage at a cost of $6/TB/month. I don’t back up my entire ~20TB NAS and its datasets, however, so I’m only paying about ~$2/month right now. I only back up the most important things I cannot afford to lose or cannot re-create.
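
For scale, a minimal Python sketch of the arithmetic behind those figures. It assumes storage is billed linearly at the quoted $6 per TB-month in decimal units, and it ignores free-tier allowances, API transaction fees and download charges, none of which it models. Under those assumptions, about $2 a month corresponds to roughly a third of a terabyte.

```python
# Rough B2-style storage cost estimate, assuming a flat per-TB-month rate
# (the $6/TB/month figure quoted above). Free tiers, API transaction fees
# and download/egress charges are deliberately not modelled.
RATE_USD_PER_TB_MONTH = 6.0


def monthly_cost(stored_tb: float, rate: float = RATE_USD_PER_TB_MONTH) -> float:
    """Monthly storage cost in USD for `stored_tb` terabytes."""
    return stored_tb * rate


def tb_for_budget(budget_usd: float, rate: float = RATE_USD_PER_TB_MONTH) -> float:
    """How many terabytes a monthly budget covers at the given rate."""
    return budget_usd / rate


if __name__ == "__main__":
    # Decimal units: 1 TB = 1000 GB.
    print(f"~$2/month covers ~{tb_for_budget(2.0) * 1000:.0f} GB")
    print(f"all ~20 TB of the NAS would be ~${monthly_cost(20):.0f}/month")
```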

@movq@www.uninformativ.de there are many other similar backup tools. I would love to hear what will make you pick Borg above the rest.

On top of my usual backups (which are already offsite, but it requires me carrying a hard disk to that other site), I think I might rent a storage server and use Borg. 🤔 Hoping that their encryption is good enough. Maybe that’ll also finally convince me to get a faster internet connection. 😂

Add support for skipping backup if data is unchagned · 0cf9514e9e - backup-docker-volumes - Mills 👈 I just discovered today, when running backups, that this commit is why my backups stopped working for the last 4 months. It wasn’t that I was forgetting to do them every month, I broke the fucking tool 🤣 Fuck 🤦♂️
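
The failure mode described here, where a "skip if unchanged" check quietly skips every run, is worth guarding against explicitly. Below is a hypothetical Python sketch, not the code from backup-docker-volumes (the function names and the single-line state file are made up for illustration). It fingerprints the data, skips only when a fingerprint from a previous successful backup exists and matches, and writes the new fingerprint only after the backup call returns without raising.

```python
import hashlib
import os
from pathlib import Path
from typing import Callable


def tree_digest(root: Path) -> str:
    """SHA-256 fingerprint of every file path and its content under root."""
    h = hashlib.sha256()
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # sorting in place makes os.walk's descent order deterministic
        for name in sorted(filenames):
            path = Path(dirpath) / name
            h.update(str(path.relative_to(root)).encode("utf-8"))
            h.update(b"\0")  # paths cannot contain NUL, so this separator is unambiguous
            h.update(hashlib.sha256(path.read_bytes()).digest())  # fixed-length per-file hash
    return h.hexdigest()


def backup_if_changed(root: Path, state_file: Path,
                      do_backup: Callable[[Path], None]) -> bool:
    """Back up root unless it matches the fingerprint of the last successful backup.

    Returns True if do_backup ran, False if the run was skipped.
    """
    current = tree_digest(root)
    previous = state_file.read_text().strip() if state_file.exists() else None
    if previous == current:
        return False  # only skip when an earlier fingerprint exists and matches
    do_backup(root)  # if this raises, the state file is left untouched
    state_file.write_text(current)  # record the fingerprint only after success
    return True
```

The design choice that matters is the ordering: recording the fingerprint before the backup has succeeded, or comparing against a value that is refreshed on every run, is one way a skip check can end up skipping forever.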

@thecanine@twtxt.net I mean I can restore whatever anyone likes, the problem is the last backup I took was 4 months ago 😭 So I decided to start over (from scratch). Just let me know what you want and I’ll do it! I used the 4-month-old backup to restore your account (by hand) and avatar at least 🤣

@thecanine@twtxt.net I’m so sorry I fucked things up 🥲 I hope you can trust I’ll try to do a better job of backups and data going forward 🤗

This weekend (as some of you may know) I accidentally nuked this Pod’s entire data volume 🤦♂️ What a disastrous incident 🤣 I decided that instead of trying to restore from a 4-month-old backup (we’ll get into why I hadn’t been taking backups consistently later), we’d start afresh! 😅 Spring clean! 🧼 – Anyway… One of the things I realised was that I was missing some very critical safety controls in my own ways of working… I’ve now rectified this…

@prologic@twtxt.net Spring cleanup! That’s one way to encourage people to self-host their feeds. :-D

Since I’m only interested in the url metadata field for hashing, I do not keep any comments or metadata for that matter, just the messages themselves. The last time I fetched was probably some time yesterday evening (UTC+2). I cannot tell exactly, because the recorded last-fetch timestamp has been overwritten with today’s by now.

I dumped my new SQLite cache into: https://lyse.isobeef.org/tmp/backup.tar.gz This time maybe even correctly, if you’re lucky. I’m not entirely sure. It took me a few attempts (at first, date and time were separated by a space instead of a T; I also normalized +00:00 offsets to Z, as yarnd does, and converted newlines back to U+2028). At least now the simple cross-check with the Twtxt Feed Validator does not yield any problems.
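
The normalisation steps mentioned above (a T between date and time, +00:00 written as Z, embedded newlines turned back into U+2028) can be sketched in Python as follows. This is an illustration under assumptions, not yarnd's actual code: it assumes the cache stores ISO-8601-like timestamps such as `2025-05-01 12:34:56+00:00` alongside the raw message text.

```python
from datetime import datetime

LINE_SEPARATOR = "\u2028"  # twtxt convention for newlines inside a single twt


def normalize_timestamp(raw: str) -> str:
    """Turn e.g. '2025-05-01 12:34:56+00:00' into '2025-05-01T12:34:56Z'."""
    # fromisoformat accepts a space or a 'T' between date and time.
    ts = datetime.fromisoformat(raw)
    out = ts.isoformat()  # isoformat always uses 'T' as the separator
    if out.endswith("+00:00"):
        out = out[: -len("+00:00")] + "Z"  # write a zero UTC offset as Z
    return out


def normalize_text(text: str) -> str:
    """Put a multi-line message back on a single line using U+2028."""
    return text.replace("\r\n", "\n").replace("\n", LINE_SEPARATOR)


def to_twtxt_line(raw_ts: str, text: str) -> str:
    """One tab-separated twtxt line from a cached timestamp and message."""
    return f"{normalize_timestamp(raw_ts)}\t{normalize_text(text)}"
```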

Oh well. I’ve gone and done it again! This time I’ve lost 4 months of data because for some reason I’ve been busy and haven’t been taking backups of all the things I should be?! 🤔 Farrrrk 🤬

@prologic@twtxt.net If it develops, and I’m not saying it will happen soon, perhaps Yarn could be connected as an additional node. Implementation would not be difficult for any client or software. It will not only be a backup of twtxt, but it will be the source for search, discovery and network health.

Data Protection Working Group Deep Dive Session at KubeCon + CloudNativeCon London

Data on Kubernetes is a growing field, with databases, object stores, and other stateful applications moving to the platform. The Data Protection Working Group focuses on data availability and preservation for Kubernetes – including backup, restore,… ⌘ Read more

UK Authorities Demand Back Door Access to iCloud Backups Globally

The British government has demanded that Apple give it blanket access to all user content uploaded to the cloud, reports The Washington Post.

The undisclosed order is said to have been issued last month, and requires that Apple creates a back door that allo … ⌘ Read more

i upgraded my pc from lubuntu 22.04 to 24.04 yesterday and i was like “surely there is no way this will go smoothly” but no it somehow did. like i didn’t take a backup i just said fuck it and upgraded and it WORKED?!?! i mean i had some driver issues but it wasn’t too bad to fix. wild

Super Watchdog Raspberry Pi HAT with Battery Backup Gains Multi-Chemistry Support

The Super Watchdog HAT with UPS Battery Backup provides power management and reliability for mission-critical Raspberry Pi applications. It supports all Raspberry Pi models, ensuring uninterrupted operation, data protection during outages, and system monitoring. The latest release for this HAT introduces multi-chemistry support, allowing compatibility with a range of 18650 battery … ⌘ Read more

A random suggestion: you should add a passphrase to your private SSH key. Why? If someone steals your key file, they won’t be able to use it without the passphrase.

You should run: `ssh-keygen -p`

And remember to make a backup copy of the key file. As a developer, it is one of the most valuable files on your computer.
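
A backup copy of a private key should keep owner-only permissions, just like the original. A minimal Python sketch of the copy step, assuming you pass in your own key path and backup directory (both hypothetical here). Running it after `ssh-keygen -p` means the copy is passphrase-protected too.

```python
import os
import shutil
import stat
from pathlib import Path


def backup_private_key(key: Path, backup_dir: Path) -> Path:
    """Copy a private key into backup_dir, readable and writable by the owner only."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    os.chmod(backup_dir, 0o700)  # backup directory: owner-only access
    dest = backup_dir / key.name
    shutil.copy2(key, dest)  # copies contents plus metadata (mtime, mode bits)
    os.chmod(dest, stat.S_IRUSR | stat.S_IWUSR)  # enforce 0600 whatever the source mode
    return dest
```

For example, `backup_private_key(Path.home() / ".ssh" / "id_ed25519", Path("/mnt/backup/ssh"))`, where both paths are placeholders for your own setup.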

So… Been a while since I’ve done this… But on macOS the best way to rip DVD(s) now is to 1) Use MakeMKV to back up the DVD disc and decrypt it 2) Use Handbrake to re-encode the backed-up DVD disc into something more reasonable 3) Put it on a NAS or Media Server. ⌘ Read more

@emmanuel@wald.ovh Btw I already figured out why accessing your web server is slow:

```
$ host wald.ovh
wald.ovh has address 86.243.228.45
wald.ovh has address 90.19.202.229
```

wald.ovh has 2 IPv4 addresses, one of which is dead and doesn’t respond. That’s why accessing your website is so slow. Depending on client and browser behavior, one of two things may happen: 1) a random IP is chosen and ½ the time the wrong one is picked, or 2) both are tried in some random order and ½ the time it’s slow because the broken one is tried first.

If you don’t know what 86.243.228.45 is, or it’s a dead backup server or something, I’d suggest you remove this from the domain record.
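
To check which of several A records actually answers, you can resolve every IPv4 address for a name and try a TCP connection to each with a short timeout. A minimal Python sketch, assuming the web server listens on port 443 (adjust the port and timeout as needed). It only tests TCP reachability, not whether the right site is served.

```python
from __future__ import annotations

import socket


def ipv4_addresses(host: str) -> list[str]:
    """All distinct IPv4 addresses the resolver returns for host."""
    infos = socket.getaddrinfo(host, None, socket.AF_INET, socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def is_reachable(addr: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to addr:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((addr, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, host unreachable, ...
        return False


def probe(host: str, port: int = 443) -> dict[str, bool]:
    """Map each resolved IPv4 address of host to whether it accepts connections."""
    return {addr: is_reachable(addr, port) for addr in ipv4_addresses(host)}
```

Called as `probe("wald.ovh")`, it should report one of the two addresses as unreachable if the diagnosis above still holds; DNS records and server state may of course have changed since.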

(#34st2yq) @bender@bender Haha just making sure when I’m removing snapshots from my backup that I don’t remove the wrong ones 🤣

iCloud Backups No Longer Available for iPhones and iPads Running iOS 8 or Earlier

Making a device backup over iCloud now requires iOS 9 or later, which means iPhones and iPads that are running iOS 8 or earlier are no longer able to be backed up using iCloud.

Apple [announced the change in November](https://www.macrumors.com/2024/11/18/apple-icloud-backup-ios-9-min … ⌘ Read more

PSA: macOS Sequoia 15.2 Breaks SuperDuper Bootable Backups

Apple’s latest macOS Sequoia 15.2 update has introduced a critical bug that prevents the popular backup utility SuperDuper from creating bootable backups, according to the app’s chief developer, Shirt Pocket’s Dave Ninian.

4, various improvements, updates, and bug fixes.

```
Support USDT (ERC20 & TRC20)
Refactor tabs for simplicity
Update Tails script to retry download using wget
Improve backup recovery if wallet cache is corrupt
Fix sorting Buy or Sell XMR > Amount column
Update price nodes to support USDT
Other stability improvements and bug fixes [..]
```

... ⌘ [Read more](https://monero.observer/haveno-v1.0.13-released-support-tether-usdt/)

Backup and recovery for Vector Databases on Kubernetes using Kanister

Community post by Pavan Navarathna Devaraj and Shwetha Subramanian AI is an exciting, rapidly evolving world that has the potential to enhance every major enterprise application. It can enhance cloud-native applications through dynamic scaling, predictive maintenance,… ⌘ Read more

Spotify Launches Offline Backup Feature for Premium Users

Spotify has announced a new feature called Offline Backup, designed to give Premium subscribers an additional way to listen to music offline without manually downloading playlists. Rolling out globally this week, the feature aims to address situations where users find themselves without an internet connection and haven’t … ⌘ Read more

Can I get someone like maybe @xuu@txt.sour.is or @abucci@anthony.buc.ci or even @eldersnake@we.loveprivacy.club – If you have some spare time – to test this yarnd PR that upgrades the Bitcask dependency for its internal database to v2? 🙏

VERY IMPORTANT: If you do, please, please, please back up your yarn.db database first! 😅 Heaven knows I don’t want to be responsible for fucking up a production database here or there 🤣

@prologic@twtxt.net earlier you suggested extending hashes to 11 characters, but here’s an argument that they should be even longer than that.

Imagine I found this twt one day at https://example.com/twtxt.txt :

`2024-09-14T22:00Z Useful backup command: rsync -a "$HOME" /mnt/backup`

and I responded with “(#5dgoirqemeq) Thanks for the tip!”. Then I’ve endorsed the twt, but it could later be changed to

`2024-09-14T22:00Z Useful backup command: rm -rf /some_important_directory`

which also has an 11-character base32 hash of 5dgoirqemeq. (I’m using the existing hashing method with https://example.com/twtxt.txt as the feed url, but I’m taking 11 characters instead of 7 from the end of the base32 encoding.)

That’s what I meant by “spoofing” in an earlier twt.

I don’t know if preventing this sort of attack should be a goal, but if it is, the number of bits in the hash should be at least two times log2(number of attempts we want to defend against), where the “two times” is because of the birthday paradox.
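
To put numbers on this, assuming the current method's 256-bit BLAKE2b digest: each base32 character carries 5 bits, except the final character of the unpadded encoding, which carries only 1 (256 = 51 × 5 + 1). So the last 7 characters give 31 effective bits and the last 11 give 51, and a birthday search is expected to need on the order of 2^(bits/2) attempts. A small Python sketch of that arithmetic:

```python
import math

DIGEST_BITS = 256   # assumes a 256-bit (BLAKE2b-256) digest
BITS_PER_CHAR = 5   # base32


def effective_bits(n_trailing_chars: int) -> int:
    """Real bits in the last n chars of the unpadded base32 of a 256-bit digest.

    256 = 51*5 + 1, so the final character holds 1 data bit followed by
    4 zero padding bits; every earlier character holds 5 data bits.
    """
    last_char_bits = DIGEST_BITS % BITS_PER_CHAR or BITS_PER_CHAR
    return last_char_bits + BITS_PER_CHAR * (n_trailing_chars - 1)


def birthday_attempts(bits: int, p: float = 0.5) -> float:
    """Approximate hashes needed for collision probability p among 2**bits values."""
    return math.sqrt(2 * (2 ** bits) * math.log(1 / (1 - p)))


def bits_needed(attempts: float) -> float:
    """The rule of thumb above: at least 2 * log2(attempts) bits."""
    return 2 * math.log2(attempts)


for n in (7, 11):
    b = effective_bits(n)
    print(f"{n} chars -> {b} bits, ~{birthday_attempts(b):,.0f} hashes for a 50% collision chance")
print(f"43394987 attempts -> need more than {bits_needed(43394987):.1f} bits")
```

On these assumptions, the 43,394,987 hashes reported below sit right around the expected birthday bound for 51 bits.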

Side note: current hashes always end with “a” or “q”, which is a bit wasteful. Maybe we should take the first N characters of the base32 encoding instead of the last N.
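
Why always "a" or "q": assuming the hash is the unpadded, lowercased base32 encoding of a 256-bit BLAKE2b digest of the feed URL, timestamp and text joined by newlines, the 52nd character holds exactly 1 data bit followed by four zero padding bits. In the RFC 4648 base32 alphabet, `00000` is "a" and `10000` is "q". The Python sketch below assumes that payload layout (the spec's exact timestamp normalisation is not reproduced) and contrasts taking the last N characters with taking the first N.

```python
import base64
import hashlib


def twt_digest_b32(feed_url: str, timestamp: str, text: str) -> str:
    """Lowercase, unpadded base32 of BLAKE2b-256(url + newline + ts + newline + text).

    Assumed payload layout; timestamp formatting rules are not handled here.
    """
    payload = f"{feed_url}\n{timestamp}\n{text}".encode("utf-8")
    digest = hashlib.blake2b(payload, digest_size=32).digest()
    return base64.b32encode(digest).decode("ascii").rstrip("=").lower()


def hash_last(feed_url: str, timestamp: str, text: str, n: int = 7) -> str:
    """Current style: last n characters (the final one is always 'a' or 'q')."""
    return twt_digest_b32(feed_url, timestamp, text)[-n:]


def hash_first(feed_url: str, timestamp: str, text: str, n: int = 7) -> str:
    """Suggested alternative: first n characters, each carrying a full 5 bits."""
    return twt_digest_b32(feed_url, timestamp, text)[:n]
```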

Code I used for the above example: https://fossil.falsifian.org/misc/file?name=src/twt_collision/find_collision.c

I only needed to compute 43394987 hashes to find it.

@prologic@twtxt.net How does yarn.social’s API fix the problem of centralization? I still need to know whose API to use.

Say I see a twt beginning (#hash) and I want to look up the start of the thread. Is the idea that if that twt is hosted by a yarn.social pod, it is likely to know the thread start, so I should query that particular pod for the hash? But what if no yarn.social pods are involved?

The community seems small enough that a registry server should be able to keep up, and I can have a couple of others as backups. Or I could crawl the list of feeds followed by whoever emitted the twt that prompted my query.

I have successfully used registry servers a little bit, e.g. to find a feed that mentioned a tag I was interested in. Was even thinking of making my own, if I get bored of my too many other projects :-)

Raspberry Pi Pico RP2040-Powered FlippyDrive: An Optical Disc Drive Emulator for GameCube

CrowdSupply recently announced the FlippyDrive campaign, described as an open-source optical disc drive emulator for the GameCube console designed to install without soldering. This product allows users to maintain their physical disc drive functionality while offering additional options for running backups and homebrew software. FlippyDrive operates using the Raspbe … ⌘ Read more

@prologic@twtxt.net I did that, and it returns no error.

```
user@server:~/backup/yarn$ make deps
user@server:~/backup/yarn$ make server
/bin/sh: 4: minify: not found
/bin/sh: 5: minify: not found
/bin/sh: 6: minify: not found
make: *** [Makefile:84: generate] Error 127
```

How close are we to chaos? It turns out, just one blue screen of death

Tech meltdowns like CrowdStrike look like the new normal, and we will need to prepare better backup plans, such as cash. ⌘ Read more

Docker Desktop 4.32: Beta Releases of Compose File Viewer, Terminal Shell Integration, and Volume Backups to Cloud Providers

Discover the powerful new features in Docker Desktop 4.32, including the Compose File Viewer, terminal integration, and enterprise-grade volume backups, designed to enhance developer productivity and streamline workflows. ⌘ Read more

Home Server Offline ☹️

Today, I woke up and noticed that my home server, located in my second flat, and also the router, all behind a 5G connection (that was showing as working fine on the provider’s website), were offline. No VPN connection anymore, and also Tailscale showed the nodes as being offline. I’m glad that I had automatic backups and was able to easily restore the three important services from that server on my VPS, without the need to travel to the second flat first. ⌘ Read more

QNAP Launches TS-216G: A 2-Bay 2.5GbE NAS System for Efficient Management and Rapid Backup Solutions

This month, QNAP Systems, Inc. rolled out the TS-216G NAS, a dynamic 2-bay network attached storage (NAS) system, optimized for both individual and workgroup use. Featuring a 2.5GbE port and hot-swappable capability, it promises efficient data management and enhanced reliability. At the core of the TS-216G is a 64-bit Arm quad-core processor, co … ⌘ Read more

@mckinley@twtxt.net for me:

- a wall mount 6U rack which has:
  - 1U patch panel
  - 1U switch
  - 2U UPS
  - 1U server, intel atom 4G ram, debian (used to be main. now just has prometheus)
  - 1U patch panel
- a mini ryzen 16 core 64G ram, fedora (new main)
  - multiple docker services hosted.
- synology nas with 4 2TB drives
- turris omnia WRT router -> fiber uplink

network is a mix of wireguard, zerotier.

- wireguard to my external vms hosted in various global regions.
  - this allows me ingress since my ISP has me behind CG-NAT
- zerotier is more for devices for transparent vpn into my network

i use ssh and remote desktop to get in and about. typically via zerotier vpn. I have one of my VMs with ssh on a backup port for break glass to get back into the network if needed.

everything has ipv6 though my ISP does not provide it. I have to tunnel it in from my VMs.

Tuesday, February 27 | Top stories | From the Newsroom

Malcolm Turnbull criticises Donald Trump’s ties to Vladimir Putin, NSW Police uninvited from Mardi Gras, Cristiano Ronaldo faces suspension for inappropriate gesture, and Taylor Swift’s Australian tour concludes with her backup dancer delighting the crowd with Aussie slang. ⌘ Read more

MacOS Sonoma 14.3.1 Update Fixes Text Overlap Bug on Macs

iOS 17.3.1 and iPadOS 17.3.1, which fix the same bug on iPhone and iPad, and watchOS 10.3.1, which resolves the bug on Apple Watch. How to Download & Install MacOS Sonoma 14.3.1 Update: Be sure you back up the Mac to Time Machine before beginning any software update. Go to the Apple menu, choose “System … ⌘ Read more

How to Boot Into MacOS Recovery in UTM on Apple Silicon Mac

If you’ve installed macOS Sonoma into a UTM virtual machine you may get into a situation where you’d either like to restore the VM from a Time Machine backup, or even reinstall Sonoma in the VM, or perform other actions on the virtual machine from Recovery Mode. But, as you likely have noticed by now, … ⌘ Read more

Using GitHub Actions to backup OneDrive to S3

My ideals of using open-source software are fading a bit, and I’ve been using OneDrive to synchronize my files for quite some time now. It’s cheap and works reliably, at least. ⌘ Read more

Some iPhones Are Restarting or Turning Off Randomly at Night

Some iPhone users are discovering their iPhone has either randomly rebooted, or turned itself off, usually while plugged in overnight. Perhaps worst of all, when the iPhone starts back up, any set alarm may not go off, which has led some people to oversleep or to have to rely on a backup alarm. While not … ⌘ Read more

Is Mars a Good Backup Plan? ⌘ Read more

I’ve been smart and added a backup copy of my twtxt file to Storj, cos my internet keeps on dying

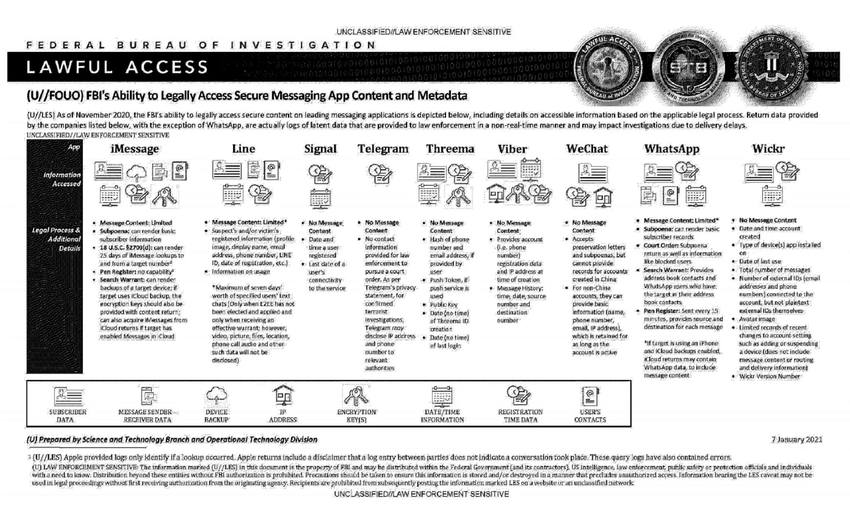

An official FBI document dated January 2021, obtained by the American association “Property of People” through the Freedom of Information Act.

This document summarizes the possibilities for legal access to data from nine instant messaging services: iMessage, Line, Signal, Telegram, Threema, Viber, WeChat, WhatsApp and Wickr. For each app, different legal methods are explored, such as subpoena, search warrant, real-time collection of communications metadata (“Pen Register”) or compelled disclosure of stored records (“18 USC§2703”). Here, in essence, is the information the FBI says it can retrieve:

Apple iMessage: basic subscriber data; in the case of an iPhone user, investigators may be able to get their hands on message content if the user uses iCloud to synchronize iMessage messages or to back up data on their phone.

Line: account data (image, username, e-mail address, phone number, Line ID, creation date, usage data, etc.); if the user has not activated end-to-end encryption, investigators can retrieve the texts of exchanges over a seven-day period, but not other data (audio, video, images, location).

Signal: date and time of account creation and date of last connection.

Telegram: IP address and phone number for investigations into confirmed terrorists, otherwise nothing.

Threema: cryptographic fingerprint of phone number and e-mail address, push service tokens if used, public key, account creation date, last connection date.

Viber: account data and IP address used to create the account; investigators can also access message history (date, time, source, destination).

WeChat: basic data such as name, phone number, e-mail and IP address, but only for non-Chinese users.

WhatsApp: the targeted person’s basic data, address book and contacts who have the targeted person in their address book; it is possible to collect message metadata in real time (“Pen Register”); message content can be retrieved via iCloud backups.

Wickr: date and time of account creation, types of device on which the application is installed, date of last connection, number of messages exchanged, external identifiers associated with the account (e-mail addresses, telephone numbers), avatar image, data linked to adding or deleting.

TL;DR Signal is the messaging system that provides the least information to investigators.

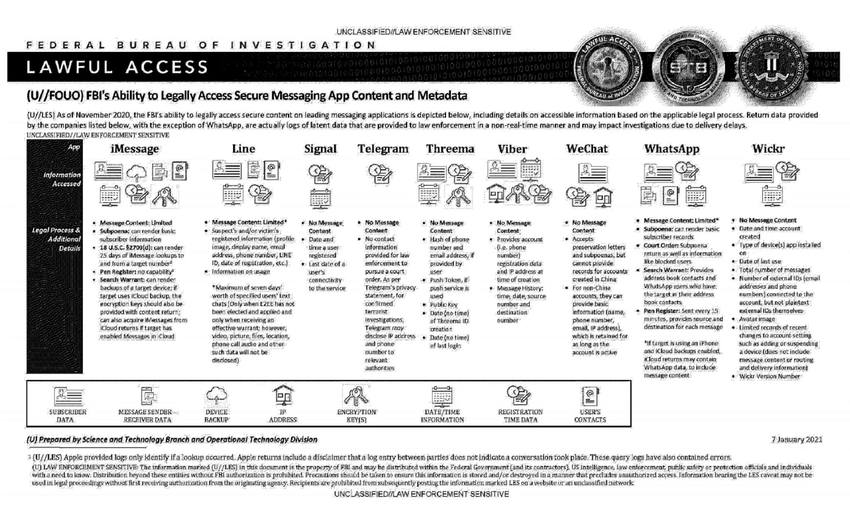

An official FBI document dated January 2021, obtained by the American association “Property of People” through the Freedom of Information Act.

This document summarizes the possibilities for legal access to data from nine instant messaging services: iMessage, Line, Signal, Telegram, Threema, Viber, WeChat, WhatsApp and Wickr. For each software, different judicial methods are explored, such as subpoena, search warrant, active collection of communications metadata (“Pen Register”) or connection data retention law (“18 USC§2703”). Here, in essence, is the information the FBI says it can retrieve:

Apple iMessage: basic subscriber data; in the case of an iPhone user, investigators may be able to get their hands on message content if the user uses iCloud to synchronize iMessage messages or to back up data on their phone.

Line: account data (image, username, e-mail address, phone number, Line ID, creation date, usage data, etc.); if the user has not activated end-to-end encryption, investigators can retrieve the texts of exchanges over a seven-day period, but not other data (audio, video, images, location).

Signal: date and time of account creation and date of last connection.

Telegram: IP address and phone number for investigations into confirmed terrorists, otherwise nothing.

Threema: cryptographic fingerprint of phone number and e-mail address, push service tokens if used, public key, account creation date, last connection date.

Viber: account data and IP address used to create the account; investigators can also access message history (date, time, source, destination).

WeChat: basic data such as name, phone number, e-mail and IP address, but only for non-Chinese users.

WhatsApp: the targeted person’s basic data, address book and contacts who have the targeted person in their address book; it is possible to collect message metadata in real time (“Pen Register”); message content can be retrieved via iCloud backups.

Wickr: Date and time of account creation, types of terminal on which the application is installed, date of last connection, number of messages exchanged, external identifiers associated with the account (e-mail addresses, telephone numbers), avatar image, data linked to adding or deleting.

TL;DR Signal is the messaging system that provides the least information to investigators.

@mckinley@twtxt.net I use spideroak backup, and switched from selfhosted nextcloud to proton drive.

Follow-up question for you guys: Where do you back up your files to? Anything besides the local NAS?

@mckinley@twtxt.net ninja backup and Borg

@mckinley@twtxt.net Yeah, that’s more clear. 👌

Systems that are on all the time don’t benefit as much from at-rest encryption, anyway.

Right, especially not if it’s “cloud storage”. 😅 (We’re only doing it on our backup servers, which are “real” hardware.)

My backup power for internet and raspis is good for a couple hours.

@jlj@twt.nfld.uk Very grateful for the 5€/month donation, it’s paying for my backup server, an Intel Atom N2800 Kimsufi dedicated server. It’s running DragonFly BSD! :D

Digital Prepping, Part 3 - Backups, Storage, and EMPs

Planning your data storage, safeguarding it from disaster, and doing the same for your electronics. ⌘ Read more

No Backup: The demise of physical media

From “Backup Floppies” to “No Physical Media” in 40 years. ⌘ Read more

restic · Backups done right! – In case no-one has used this wonderful tool restic yet, I can beyond a doubt assure you it is really quite fantastic 👌 #backups

I’ll see if I have time tonight, I’ll take a backup of everything, then test a bit. I’ll let you know if I get stuck on anything. thank you for asking :)

September Extensions Roundup: Test APIs, Use Oracle SQLcl, and More

Find out what’s new this month in the Docker Extension Marketplace! Access InterSystems, test APIs, use Oracle SQLcl, and backup/share volumes — right from Docker Desktop. ⌘ Read more

Back Up and Share Docker Volumes with This Extension

You can now back up volumes with the new Volumes Backup & Share extension. Find out how it works in this post! ⌘ Read more

LundukeFest Part 2 scheduling update!

Bringing in some backup! ⌘ Read more

The battery life in this i9 MacBook Pro from 2018 has diminished to being barely enough to serve as a UPS backup system with enough time to perform a safe shutdown

Isode: Successfully Managing HF Radio Networks

With the potential for new technologies to cause interference to traditional communications networks and even space itself at the risk of becoming weaponised, it is important to make sure that you always have a backup plan for your communications ready and waiting.

Should the worst happen and your primary network, typically SatCom, go down you need to ensure that you can still communicate with your forces wherever they are, and that c … ⌘ Read more

My home and code server now has 2 TB of SSD storage and 16 GB of RAM. While I’ll be using the storage for backups, etc., I’m not quite sure what I can use the 16 GB of RAM for yet. What else can I run besides Home Assistant, AdGuard Home, Drone and Tailscale? I still have my VPS running my websites, Miniflux, Bitwarden, Firefox Sync Server, RSS-Bridge, Firefly III, Nitter and Gitea. 🤔 ⌘ Read more

Getting started with Restic for my backup needs. It’s really neat and fast.

@lucididiot@tilde.town thank you for the backup! i agree that wiki is in dire need of some maintenance but that hardly seems like a reason to just up and delete it…

Lost my recent links collection from links.oevl.info. MAKE FUCKING BACKUPS #ShitHappens

I get slightly nervous when my backup program does full uploads of FLAC files that shouldn’t have changed at all and should only be a checksum match

Soooo. My whole public_html folder is gone. twtxt as well. Hope I can find a backup somewhere

Saved Game History: Battery Backups, Memory Cards, and the Cloud https://tedium.co/2019/02/21/video-game-save-state-history/

How to Backup Your Allo Conversations Before Google Shuts it Down https://lifehacker.com/how-to-backup-your-allo-conversations-before-google-shu-1830922541

Somehow, http://www.lord-enki.net/medium-backup/2016-09-01_A-Qualified-Defense-of-Jargon—Other-In-Group-Signifiers-2fe2cd37b66b.html is getting a lot of hate. I thought it was pretty even-handed. Do people dislike it because I said culture fit should only matter when it impacts effectiveness, or that it’s useful at all?

In case anybody cares, I’ve fixed up my mirror of all my medium posts. (Useful if you are a cheapskate!) As always, I prefer people with a medium account to actually go there & clap so I get a dime. http://www.lord-enki.net/medium-backup/