@doesnm@doesnm.p.psf.lt never!

@bender@twtxt.net hmm.. indeed.

@bender@twtxt.net the EF this feed is muted. Why is yarn busted? 😁 @lyse@lyse.isobeef.org @prologic@twtxt.net

Your TOTP value has been accepted

@bender@twtxt.net So turns out something is setting my HashingURI to the value {{ .Profile.URI }} and that is making my hashes wrong so it cannot delete or edit twts.

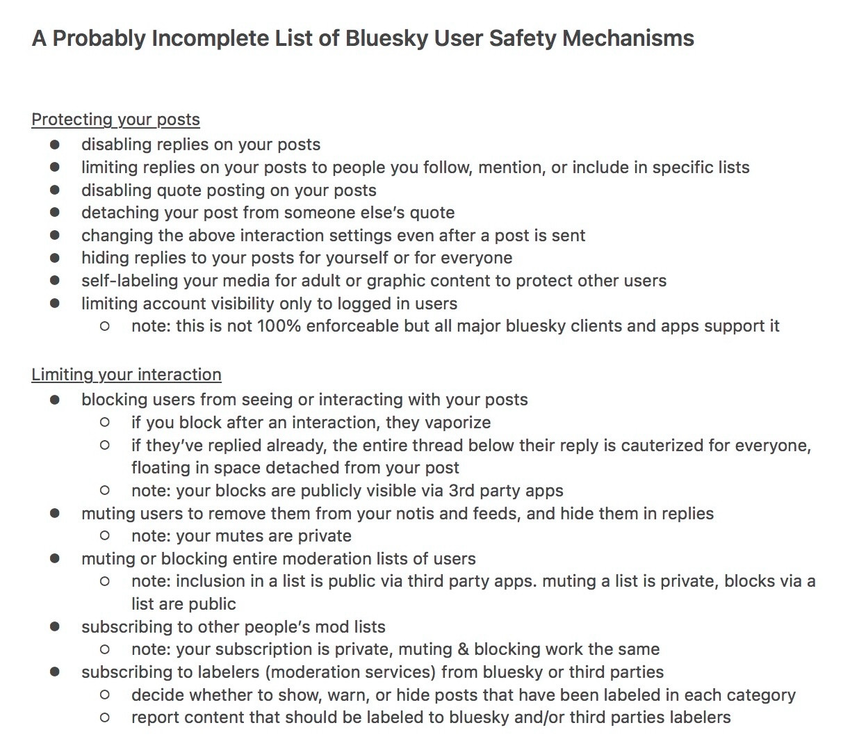

Interesting list of features to protect users and communities on Bluesky. I wonder if any of them make sense in a text context.

Nope. 😐

Can I edit this twt?

Why is the root post after the reply?? Time shenanigans?

Oh. So I can see it now that I trust your pod.

@prologic@twtxt.net just rebuilt my image... though git says I am already at the latest.

Lol. “Lightly Encrypted” https://www.pcmag.com/news/hot-topic-breach-confirmed-millions-of-credit-cards-email-addresses-exposed

@prologic@twtxt.net I never got the root for this

@wbknl@twtxt.net I have thought of getting one. I wish there were easier tools for it than direwolf

🤑😜

@bender@twtxt.net Linux and Android. I would never iOS my friend.

@lyse@lyse.isobeef.org Agree on the HTTP stuff. I mean, we could mention that, for optimization, RFC yadda yadda should be followed for caching, but not make it part of the spec proper.

@eapl.me@eapl.me Neat.

So for twt metadata the lextwt parser currently supports values in the form [key=value]

https://git.mills.io/yarnsocial/go-lextwt/src/branch/main/parser_test.go#L692-L698
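A rough Go sketch of pulling `[key=value]` pairs out of a twt body. This is not lextwt's implementation (the exact key/value rules it accepts are in the linked test); here the key is assumed to be letters, digits, `_` or `-`, and the value is assumed to be anything up to the closing `]`. `ParseMeta` is a hypothetical name.

```go
package main

import (
	"fmt"
	"regexp"
)

// metaRe matches the assumed form [key=value]: a key made of letters, digits,
// '_' or '-', then '=', then a value running up to the closing ']'.
var metaRe = regexp.MustCompile(`\[([A-Za-z0-9_-]+)=([^\]]*)\]`)

// ParseMeta returns every key/value pair found in a twt body.
// If a key appears more than once, the last occurrence wins.
func ParseMeta(body string) map[string]string {
	meta := make(map[string]string)
	for _, m := range metaRe.FindAllStringSubmatch(body, -1) {
		meta[m[1]] = m[2]
	}
	return meta
}

func main() {
	meta := ParseMeta("Hello world [lang=en] [edit=abc1234]")
	fmt.Println(meta["lang"], meta["edit"]) // prints "en abc1234"
}
```

Brackets without an `=` (e.g. `[not meta]`) are simply not matched, so ordinary bracketed text in a twt passes through untouched.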

@sorenpeter@darch.dk On point 4, for Gemini: if your TLS client certificate contains your nick@host, could that work for discovery?

@bender@twtxt.net They revel in their blindness. Roll in their own stink.

I am so sorry.

@quark@ferengi.one Yeah, I’m in deep red here. The governor race is getting split between a red and a MAGA candidate who is running as a write-in... but even if they split the vote 50-50, each of them will still get more than the blue will.

Unfortunately, the US media has been making it a nail-biter on purpose when in reality it is not. Get out and vote in numbers that cannot be denied. And then get everyone else around you to vote too.

Maybe one day enough states will make it into the NaPo InterCo to finally put the EC to rest.

Spent some time cleaning up my AoC code to get ready for December 1st. Anyone else doing it this year? @prologic@twtxt.net do we have to set up a new team each year?

@prologic@twtxt.net Yeah, the short nick is going to be unique enough. There is always the long nick that adds the domain for differentiation.

@sorenpeter@darch.dk I run WeeChat headless on a VM and mostly connect via mobile or desktop. I use the Android client or Glowing Bear. Work blocks all comms on their always-on MitM VPN, so I can’t use it in the office anymore. So I just use mobile.

@prologic@twtxt.net that should be right

This is pretty neat! An IP KVM that doesn’t cost a gagillion dollars. I might just back it. https://www.kickstarter.com/projects/jetkvm/jetkvm

Well poop. Covid coming to visit for a second time.

@prologic@twtxt.net Currently? It wouldn’t :D

We would need to come up with a way of registering with multiple brokers that can, I guess, forward to a reader broker. Something that will retry if needed. I need to read into how SimpleX handles multiple brokers.

Yes, a redirect to my profile URI, because it’s crazy long and ugly.

@doesnm@doesnm.p.psf.lt Agree. salty.im should allow users to list multiple brokers in their WebFinger so the client can find a working path.

I am reminded of this when I look at entire forks of VS Code made just to add an LLM code completion assistant.

Yeah... it is very similar to salty.im. An SMP server is a relay queue for messages. You can self-host one if you choose. They also have something called XFTP for data storage and device state transfer, which you can also self-host.

Is anyone here on SimpleX? https://sour.is/simplex

Same! Great joke!

@movq@www.uninformativ.de I’m sorry if I sound too contrarian. I’m not a fan of using an obscure hash either. The problem is one of future and backward compatibility. If we change to sha256 or another, we don’t just need to support sha256; we now need to support both sha256 AND blake2b. Or we divide the community: users of some clients will still use the old algorithm and get left behind.

Really we should all think hard about how changes will break things and if those breakages are acceptable.

I swear I did write up an algorithm for it at some point; I think it is lost in a git comment someplace. I’ll put together some pseudo/Go code this week.

Super simple:

Making a reply:

- If yarn has one, use that. (Maybe do a collision check?)

- Make a hash of the raw twt, with no truncation.

- Check the local cache for the shortest hash without a collision, in SQL:

  select len(subject) where head_full_hash like subject || '%'

Threading:

- Get the full hash of the head twt.

- Search for twts, in SQL:

  head_full_hash like subject || '%' and created_on > head_timestamp

The assumption is that replies will be for the most recent head. If replying to an older one, the client will use a longer hash.

There are plenty of implementations: https://www.blake2.net/#su

I mean, sure, if I want to run it on my toothbrush, why not use something that is available everywhere, like md5? crc32? It was chosen a long while back, and the only benefit of changing now is “I can’t find an implementation for X”, while the downside is that it breaks all existing threads. So…

These collisions aren’t important unless someone tries to fork. So... for the vast majority it’s not a big deal. Using the grow hash algorithm could tell the client to add another char when they fork.

People stranded on the roof of a hospital in Tennessee after hurricane Helene

Wild flooding in Asheville, NC due to Hurricane Helene