@bender@twtxt.net Sorry, trust was the wrong word. I meant trust in the sense that you do not have to check with anything or anyone that the hash is valid: you can verify it yourself by recomputing the hash from the content it points to, etc.
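To make the “verify it yourself” point concrete, here’s a minimal Go sketch. Yarn’s twt hashes are Blake2b-based, but Blake2b isn’t in Go’s standard library, so SHA-256 (crypto/sha256) stands in for it here; contentHash and verify are hypothetical names, not yarnd functions.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// contentHash derives an address purely from the bytes it points to.
// Assumption: SHA-256 stands in for the Blake2b hash Yarn actually uses.
func contentHash(content []byte) string {
	sum := sha256.Sum256(content)
	return hex.EncodeToString(sum[:])
}

// verify needs no third party: recompute the hash and compare.
func verify(content []byte, claimed string) bool {
	return contentHash(content) == claimed
}

func main() {
	twt := []byte("Hello World!")
	h := contentHash(twt)
	fmt.Println(verify(twt, h))                    // true
	fmt.Println(verify([]byte("Hello World?"), h)) // false
}
```

Anyone holding the content can check the address, which is the whole point of content-addressing.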

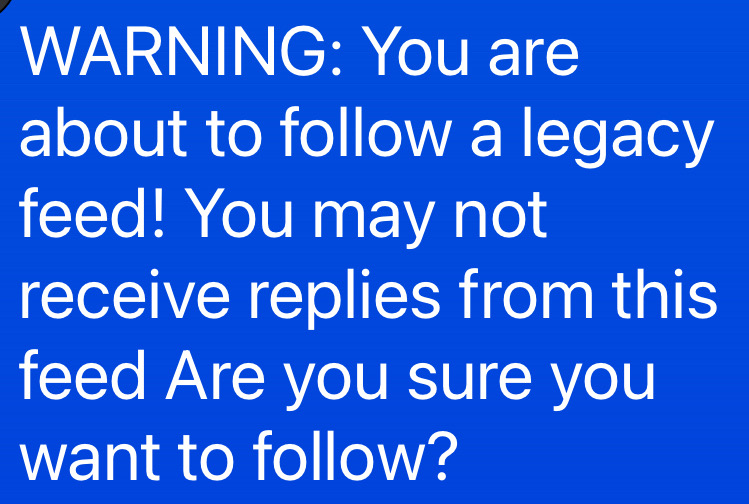

Has anyone had any interactions with @cuaxolotl@sunshinegardens.org yet? Or are they using a client that doesn’t know how to properly detect which clients are following them? Hmmm 🧐

It’s a really good time to invest in nVIDIA shares 🤣

@lyse@lyse.isobeef.org errors are already reported to users, but they’re only visible in the following list.

Does anyone know what the differences between HTTP/1.1, HTTP/2 and HTTP/3 are? 🤔

@falsifian@www.falsifian.org By the way, on the last Saturday of every month we generally hold an online video call/social meetup where we just get together and talk about stuff, if you’re interested in joining us this month.

@falsifian@www.falsifian.org You need an Avatar 😅

It’s also (expectedly) in the feed file on disk:

2024-08-04T21:22:05+10:00 [foo][foo=][foo][foo=]

@bender@twtxt.net / @mckinley@twtxt.net could you both please change your password immediately? I will also work on some other security hardening that I have a hunch about, but will not publicize for now.

An equivalent yarnc debug <url> only sees the 2nd hash.

@aelaraji@aelaraji.com Ahh, it might very well be a Clownflare thing, as @lyse@lyse.isobeef.org alluded to 🤣 One of these days I’m going to get off Clownflare myself, and when I do I’ll share it with you. My idea is basically to have a cheap VPS like the one @eldersnake@we.loveprivacy.club has and use WireGuard to tunnel out. The VPS becomes the reverse proxy that faces the internet, and my home network then has no inbound connections whatsoever.
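The VPS half of that plan can be little more than a reverse proxy pointed at the home server’s WireGuard address. A minimal Go sketch with net/http/httputil; the upstream 10.0.0.2:8000 and the listen port :8080 are hypothetical, and TLS termination is left out for brevity.

```go
package main

import (
	"log"
	"net/http"
	"net/http/httputil"
	"net/url"
)

// newProxy returns a handler that forwards every request to upstream,
// e.g. a pod that is only reachable over the WireGuard tunnel.
func newProxy(upstream string) (http.Handler, error) {
	target, err := url.Parse(upstream)
	if err != nil {
		return nil, err
	}
	return httputil.NewSingleHostReverseProxy(target), nil
}

func main() {
	// Hypothetical: the home server's address on the WireGuard interface.
	proxy, err := newProxy("http://10.0.0.2:8000")
	if err != nil {
		log.Fatal(err)
	}
	// Only the VPS faces the internet. A real setup would serve TLS here
	// (ListenAndServeTLS or a TLS-terminating front end).
	log.Fatal(http.ListenAndServe(":8080", proxy))
}
```

The home side then only needs an outbound WireGuard peer connection to the VPS; nothing listens on the home router.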

@lyse@lyse.isobeef.org Ahh so it’s not just me! 😅

Hmmm I’m a little concerned, as I’m seeing quite a few feeds I follow in an error state:

I’m not so concerned with the 15x “context deadline exceeded” errors, but I am more concerned with:

aelaraji@aelaraji.com (6 twts, last fetched 5m ago with error: dead feed: 403 Forbidden, x4 times)

And:

anth@a.9srv.net (1 twts, last fetched 5m ago with error: Get "http://a.9srv.net/tw.txt": dial tcp 144.202.19.161:80: connect: connection refused, x3733 times)

Hmmm, maybe the stats are a bit off? 🤔

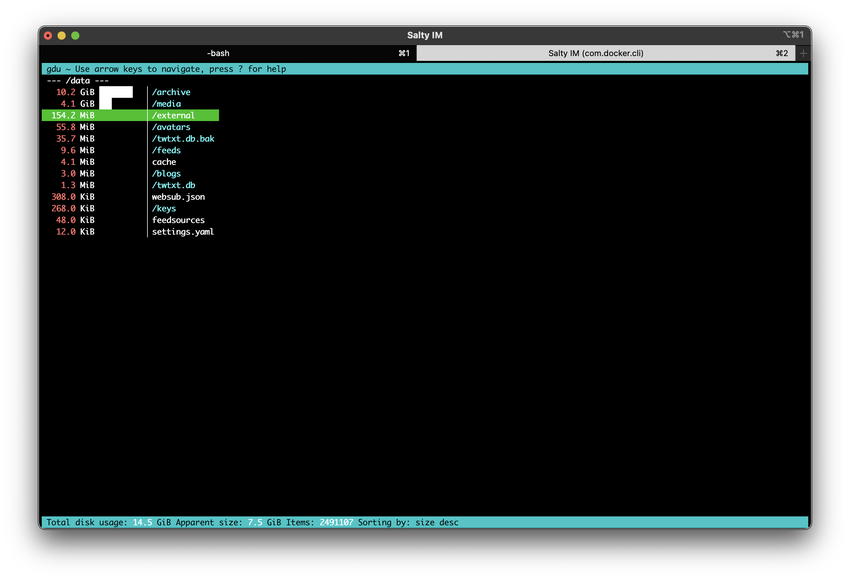

Anyway, I’m gonna have to go to bed… We’ll continue this on the weekend. Still trying to hunt down some kind of suspected multi-GB avatar using @stigatle@yarn.stigatle.no’s pod’s cache:

    $ (echo "URL Bytes"; sort -n -k 2 -r < avatars.txt | head) | column -t
    URL Bytes
    https://birkbak.neocities.org/avatar.jpg 667640
    https://darch.neocities.org/avatar.png 652960
    http://darch.dk/avatar.png 603210
    https://social.naln1.ca/media/0c4f65a4be32ff3caf54efb60166a8c965cc6ac7c30a0efd1e51c307b087f47b.png 327947
    ...

But so far nothing much… Still running the search…

@abucci@anthony.buc.ci Have any interesting errors popped up in the server logs since the flaw (unbounded receiveFile()) got fixed? 🤔

@stigatle@yarn.stigatle.no / @abucci@anthony.buc.ci My current working theory is that there is an asshole out there that has a feed that both your pods are fetching with a multi-GB avatar URL advertised in their feed’s preamble (metadata). I’d love for you both to review this PR, and once merged, re-roll your pods and dump your respective caches and share with me using https://gist.mills.io/
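To shortlist suspects, one could pull the avatar URL(s) out of each cached feed’s preamble and check those first. A rough Go sketch, assuming the "# key = value" comment-line convention Yarn uses for feed metadata and assuming metadata only appears in the leading comment block; preamble is a hypothetical helper.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// preamble collects "# key = value" metadata lines from the top of a
// twtxt feed. Blank lines are skipped; the first non-comment line ends
// the scan (assumption: metadata only lives in the leading comments).
func preamble(feed string) map[string][]string {
	meta := make(map[string][]string)
	sc := bufio.NewScanner(strings.NewReader(feed))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" {
			continue
		}
		if !strings.HasPrefix(line, "#") {
			break
		}
		key, value, ok := strings.Cut(strings.TrimPrefix(line, "#"), "=")
		if !ok {
			continue // plain comment, not key = value
		}
		k := strings.ToLower(strings.TrimSpace(key))
		meta[k] = append(meta[k], strings.TrimSpace(value))
	}
	return meta
}

func main() {
	feed := "# nick = example\n# avatar = https://example.com/avatar.png\n\n2024-08-04T21:22:05+10:00\tHello\n"
	fmt.Println(preamble(feed)["avatar"])
}
```

Splitting only on the first "=" keeps query strings such as ?size=64 intact in the value.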

@stigatle@yarn.stigatle.no Works now! 🥳

Some bad code just broke a billion Windows machines - YouTube – This is a really good, accurate and comical take on what happened with this whole CrowdStrike global fuck-up.

@xuu@txt.sour.is I have a theory as to why your pod was misbehaving too. I think that because of the way you were building it (docker build without any --build-arg VERSION= or --build-arg COMMIT=), there was no version information in the built binary and bundled assets, so cache busting would not work as expected. When htmx and hyperscript were introduced to create an SPA-like UI/UX, that is when things fell apart a bit for you. I think…
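For reference, the usual Go pattern here is to stamp version info into the binary at link time with -ldflags -X and derive asset URLs from it. A hedged sketch: the variable names, the ?v= query parameter and how a Dockerfile would forward VERSION/COMMIT to the linker are assumptions, not necessarily how yarnd does it.

```go
package main

import "fmt"

// Version and Commit are meant to be overridden at build time, e.g.
//
//	go build -ldflags "-X main.Version=0.1.0 -X main.Commit=abc1234"
//
// Without those flags, every build keeps the defaults below.
var (
	Version = "dev"
	Commit  = "unknown"
)

// assetURL appends a cache-busting query parameter derived from the
// build info, so a new build yields new URLs and browsers refetch JS/CSS.
func assetURL(path string) string {
	return fmt.Sprintf("%s?v=%s-%s", path, Version, Commit)
}

func main() {
	fmt.Println(assetURL("/js/htmx.min.js"))
}
```

Built without the -X flags, every build serves the same ?v=dev-unknown URLs, so browsers can keep stale JavaScript cached across upgrades.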

I’ve been thinking about a new term I came across whilst reading a book. It’s called “Complexity Budget”, and I think it is relevant in lots of difficult fields. I specifically think it is very relevant to the Software Industry and the organizations in this field. When researching the concept further, I was only able to find talks on complexity budgets in the context of medical care, especially psychiatric care. In this talk, complexity was described as follows:

- Complexity is confusing

- Complexity is costly

- Complexity kills

When we think of “complexity” in terms of software and software development, we have a sort of intuition about it, right? We know when software has become too complex. We know when an organization, or even a system, has grown in complexity. So we already have a good intuition for the concept.

My question to y’all is: how can we think concretely about a “Complexity Budget” and define it in terms that can be leveraged to control the complexity of software and systems?

Can anyone recommend and/or vouch for a Chrome/browser extension that lets me write rewrite rules for arbitrary links on a page? e.g.: s/(www\.)?youtube\.com\/watch\?v=([^&]+)/tubeproxy.mills.io\/play\/\2/ 🤔
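Whatever the extension, the rule itself boils down to a regex replace on each link’s href. A Go sketch of that rule; it only handles links where v is the first query parameter, and the /play/ID path on tubeproxy.mills.io is taken from the post rather than from any documented API.

```go
package main

import (
	"fmt"
	"regexp"
)

// watchURL matches a whole YouTube watch link and captures the scheme
// and the video ID (everything after v= up to the next & or #).
var watchURL = regexp.MustCompile(`^(https?)://(?:www\.)?youtube\.com/watch\?v=([^&#]+).*$`)

// rewrite points YouTube watch links at the proxy; other links pass through.
func rewrite(link string) string {
	return watchURL.ReplaceAllString(link, "${1}://tubeproxy.mills.io/play/${2}")
}

func main() {
	fmt.Println(rewrite("https://www.youtube.com/watch?v=dQw4w9WgXcQ&t=42"))
	fmt.Println(rewrite("https://example.com/"))
}
```

Note the escaped "." and "?": unescaped, "?" is a quantifier and the pattern would never match a literal watch?v= link.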

@johanbove@johanbove.info Have you played with htmx at all? 🤔

Should I just code in a workaround? If the Referer is /post, then consider it total bullshit and ignore it? 🤔

@bender@twtxt.net Hmmmm I’m not sure about this… 🧐 Does anyone who knows web/session security better than me have any other opinions?

👋 If y’all notice any weird quirks or UI/UX bugs of late on my pod, please let me know! 🙏 Those of you with a JavaScript-enabled web browser will (hopefully) notice an SPA (single page app) like experience, even on mobile! No more full page refreshes! All this without writing a single line of JavaScript (let alone React or whatever) 😅 – HTMX is pretty damn cool! 😎 #htmx

Thinking about how to programmatically manage what’s displayed on the Front page / Discover view…

Today we have two options:

- Local posts only

- All posts in cache

I’m thinking of additional checkbox (on|off) options such as:

- Latest post per feed

Any other ways we can manage this a bit better? 🤔

What’s that thing called when everyone on a social media platform (it hardly matters which one) posts the same sort of thing? It all sounds oh so wonderful, or oh so dramatic, and everyone claps and cheers and thumbs up or whatever. What’s that thing called? There’s a term for it, hmmm 🧐

👋 Okay folks, let’s start up the Yarn.social calls again.

- Event: Yarn.social Online Meetup

- When: 25th May 2024 at 12:00pm UTC (midday)

- Where: Mills Meet : Yarn.social

- Cadence: 4th Saturday of every Month

Agenda:

Anything we want to talk about. Twtxt, Yarn, self hosting, cool stuff you’ve been working on. chit-chat, whatever 😅

I think multi-user pods were a mistake.

@bendwr and I were discussing something along the lines of: how to deal with or reduce noise from legacy feeds.

Been clearing out my pod a bit and blocking unwanted domains that are basically either a) just noise and/or b) just 1-way (whose authors never reply or are otherwise unaware of the larger ecosystem).

Let me know if y’all have any other candidates you’d like me to add to the blocked domain list?
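For reference, a domain block list usually needs to cover subdomains too. A minimal Go sketch of that matching rule; isBlocked and noise.example are hypothetical, and unparseable URLs are treated as not blocked.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// isBlocked reports whether feedURL's host is a blocked domain or any
// subdomain of one (blocking example.com also covers www.example.com).
func isBlocked(feedURL string, blocked []string) bool {
	u, err := url.Parse(feedURL)
	if err != nil {
		return false
	}
	host := strings.ToLower(u.Hostname()) // Hostname() drops any :port
	for _, d := range blocked {
		d = strings.ToLower(d)
		if host == d || strings.HasSuffix(host, "."+d) {
			return true
		}
	}
	return false
}

func main() {
	blocked := []string{"noise.example"}
	fmt.Println(isBlocked("https://feeds.noise.example/twtxt.txt", blocked)) // true
	fmt.Println(isBlocked("https://notnoise.example/twtxt.txt", blocked))    // false
}
```

Matching on "."+domain rather than a bare suffix avoids accidentally blocking unrelated hosts like notnoise.example.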

</> htmx - high power tools for html – Really liking the idea of htmx 🤔 If I don’t have to learn all this complicated TypeScript/React/NPM garbage, I can just write regular SSAs (Server-Side Apps) and then progressively upgrade them to SPAs (Single-Page Apps) using htmx, hmmm 🧐

Why don’t more people borrow to invest and increase their portfolio and wealth? 🤔

So what do we think of the Reddit IPO? 🤔

@bender@twtxt.net That’s what I don’t understand either. What is driving all this perceived hate and ignorance in the world lately?!

wat da fuq does being “woke” even mean?! 🤦‍♂️

Update on my Fibre to the Premises (FTTP) upgrade: the NBN installer came out last week to install the NTD and utility box, and after some umming and ahhing, we figured out the best place to install it. However, this meant he wasn’t able to hook it up to the fibre in the pit, and it required a 2nd team to come out, dig a new trench and conduit, and use that to feed fibre from the pit to the utility box.

I rang up my ISP to find out when this 2nd team was booked, only to discover, to my horror and the horror of my ISP, that it was booked a month out on the 2rd Feb 2024! 😱

After a nice little note from my provider to NBN, I suddenly got a phone call and message from an NBN trenching team to say it would be done on Saturday (today). That got completed today (despite the heavy rain).

Now all that’s left is a final NBN tech to come and hook the two fibre pieces together and “light it up”! 🥳

Feedback on why I didn’t choose Mattermost (lack of OIDC) · mattermost/mattermost · Discussion – My discussion/feedback on Mattermost’s decision to make certain useful features, which IMO should be standard, paid-for features on a per-seat licensed basis. My primary argument is that if a vendor offers a self-host(able) product and you require additional features the free version does not have, you should not have to pay for a per-seat license for something you are already footing the bill for in terms of hardware/compute and maintenance/support (having to operate it).

Hope you have a good holiday, folks 👋 Merry Xmas to those that celebrate it 🎅 and happy holidays 🏖️

@johanbove@johanbove.info With pygame or something else? 🤔

Day 3 of #AdventOfCode puzzle 😅

Let’s go! 🤣

Come join us! 🤗

👋 Hey you Twtxters/Yarners 👋 Let’s get an Advent of Code leaderboard going!

Join with 1093404-315fafb8 and please use your usual Twtxt feed alias/name 👌

Day 2, Part 1 and Day 2, Part 2 of #AdventOfCode all done and dusted 😅

~22h to go for the 3rd #AdventOfCode puzzle (Day 3) 😅

Come join us!

Starting Advent of Code today, a day late but oh well 😅 Also going to start a Twtxt/Yarn leaderboard. Join with 1093404-315fafb8 and please use your usual Twtxt feed alias/name 👌

Bah we’ve caught COVID again 🤯

@slashdot@feeds.twtxt.net I feel like this is a bit of a common pattern? A company builds an awesome product, makes it free for a lot of users, then creates additional features and paid plans and makes a tonne of money. But later it decides it needs to make more money, so it focuses on converting the free users to paid users. Hmmm 🤔 Surely this can’t be the only viable business model? 🤔

wtf is going on with Microsoft and OpenAI of late?! Like, Microsoft bought into OpenAI for some shocking $10bn USD, then Sam Altman got fired, and now he’s been hired by Microsoft to run a new “AI” division. wtf?! Seriously?! 🤔 #Microsoft #OpenAI #Scandal

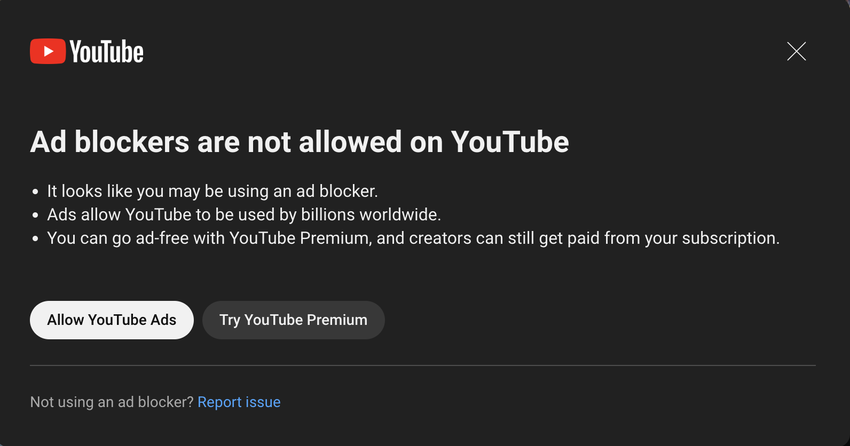

So YouTube are really cracking down on ad-blockers. The new popup is a warning saying you can watch 3 videos before you can’t watch any more. Not sure for how long. I guess my options are a) wait for the ad-blockers to catch up, b) pay for YouTube, or c) stop using YouTube.

I think I’m going with c) Stop using Youtube.

The Unreasonable Effectiveness Of Plain Text - YouTube – This is very good 👌

I’m telling ya guys 😅 plex.tv has way better shit™. Get it installed on your own server, get access to free content + your own + whatever, and no stupid tracking and bullshit 🤣

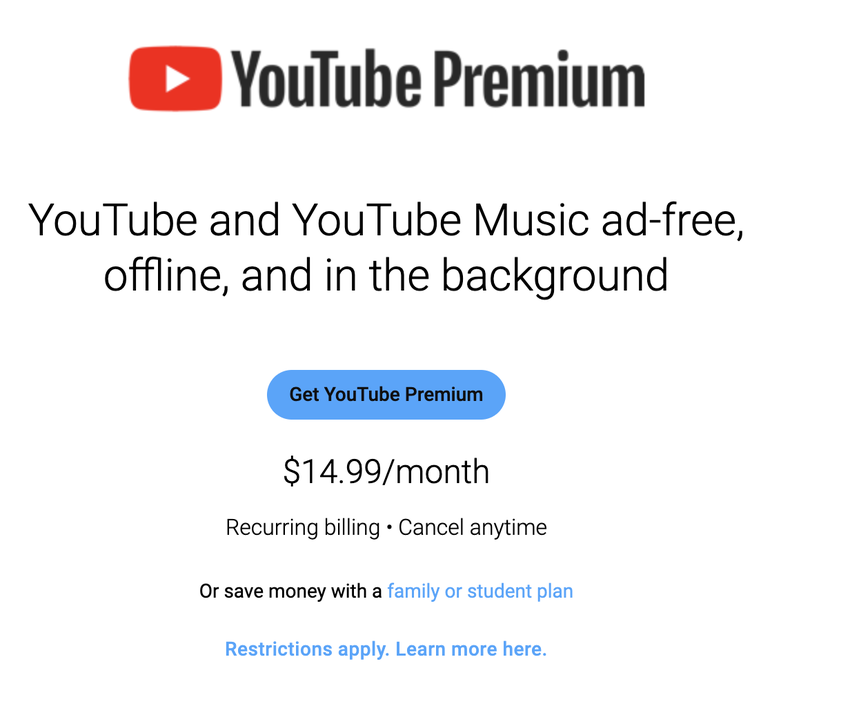

Oh okay, so Youtube is cracking down on “Ad Blockers”.  Rightio. 🤔 And paying for Youtube Premium costs $14/month?! 🤯

Get fucked 🤣 I guess I won’t be using Youtube anymore. #Youtube #Ads #Premium #Suck

I added the Yarn Desktop Client and Goovy Twtxt to the landing page for Yarn.social

Need to share something with your smart phone?

qrcode "$(pbpaste)" | open -a Preview.app -f

Hmm, I said “WireGuard is kind of cool” in this twt, but now I’m not so sure 😢 I can’t get “stable tunnels” to freakin’ stay up, survive reboots, survive random disconnections, etc. This is nuts 🤦‍♂️

@eapl.me@eapl.me Hmmm, interesting 🤔 You’re trying to use 2FA as passwords? 🤔
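For context on why that’s tricky: a TOTP (RFC 6238) code is an HMAC of the current 30-second time step under a shared secret, so it changes constantly and can’t serve as a stable password. A minimal Go sketch using only the standard library (totp is a hypothetical name), checked against the RFC’s SHA-1 test vectors:

```go
package main

import (
	"crypto/hmac"
	"crypto/sha1"
	"encoding/binary"
	"fmt"
)

// totp computes an RFC 6238 time-based one-time password with
// HMAC-SHA1 and 30-second steps. digits should be at most 9 so the
// modulus fits in a uint32.
func totp(secret []byte, unixTime int64, digits int) string {
	var msg [8]byte
	binary.BigEndian.PutUint64(msg[:], uint64(unixTime/30))
	mac := hmac.New(sha1.New, secret)
	mac.Write(msg[:])
	sum := mac.Sum(nil)
	// Dynamic truncation (RFC 4226, section 5.3).
	off := sum[len(sum)-1] & 0x0f
	bin := binary.BigEndian.Uint32(sum[off:off+4]) & 0x7fffffff
	mod := uint32(1)
	for i := 0; i < digits; i++ {
		mod *= 10
	}
	return fmt.Sprintf("%0*d", digits, bin%mod)
}

func main() {
	secret := []byte("12345678901234567890") // RFC 6238 test key
	fmt.Println(totp(secret, 59, 8))         // 94287082
}
```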

What if I run my Gitea Actions Runners on some Vultr VM(s) for now? At least until I get some more hardware just for a “build farm” 🤔

Just been playing around with some numbers… A typical small static website or blog could be run for $0.30-$0.40 USD/month. How does that compare with what you’re paying @mckinley@twtxt.net ? 🤔

@stigatle@yarn.stigatle.no Btw… I haven’t forgotten your request to document the “Upload Media” API. I’m actually trying to work out how da fuq it even works myself 🤦‍♂️

Hmm need to figure out a way to squelch the size of my pod’s data directory 🤔

ASCIIFlow This is kind of cool 😅

(ASCII-art sketch of a 3D box drawn with ASCIIFlow)

I’ve been thinking in the back of my mind for a while now that the Yarn.social / twtxt + ActivityPub integration was a mistake and a bad idea. I’m starting to consider it a complete failure.

Even’n all 👋

Home | Tabby This is actually pretty cool and useful. Just tried this on my Mac locally of course and it seems to have quite good utility. What would be interesting for me would be to train it on my code and many projects 😅

Milk-V This is pretty cool! 👌 RISC-V is coming along nicely 🤞

How do you teach someone to have self confidence? 🤔

@xuu@txt.sour.is What about the Cluster on a mini ITX board with Raspberry Pi and Nvidia Jetson?

Gah apparently I’ve gone and finally caught the wretched COVID virus 😢

‘You Just Lied’: Elon Musk Slaughters BBC Reporter In Live Interview - YouTube – As much as I don’t hold a very high opinion of Elon Musk (and to be fair I don’t actually know him all that well, only what I’ve read about him and observed), this particular video is hilariously funny and (ignoring the Twitter™ nonsense) quite on point. “Who decides whether it’s misinformation anyway?” And “You can’t even provide one example” Haha 🤣

PS: Don’t read too much in my posting this 😅

💡 Quick ‘n Dirty prototype Yarn.social protocol/spec:

If we were to decide to write a new spec/protocol, what would it look like?

Here’s my rough draft (back of paper napkin idea):

- Feeds are JSON file(s) fetchable by standard HTTP clients over TLS

- WebFinger is used at the root of a user’s domain (or a multi-user pod) for lookup, e.g.:

prologic@mills.io -> https://yarn.mills.io/~prologic.json

- Feeds contain similar metadata that we’re familiar with: Nick, Avatar, Description, etc

- Feed items are signed with an Ed25519 private key. That is, all “posts” are cryptographically signed.

- Feed items continue to use content-addressing, but use the full Blake2b Base64 encoded hash.

- Edited feed items produce an “Edited” item so that clients can easily follow Edits.

- Deleted feed items produce a “Deleted” item so that clients can easily delete cached items.

Given the continued hostility of jam6 and buckket over Yarn’s use of Twtxt (even after several years! 😱) I am continuing to face hard decisions.

I am not sure what to do about this. 🤔 I am quite confident that the hostility and sentiment is not held by all Twtxt users past and present 😢

This is a case of a few upset purists who prefer to mock, shame and behave passive aggressively instead of contributing to a healthy discussion and ecosystem.

I am uncertain what Yarn should do here 😢

Tailscale · Best VPN Service for Secure Networks - Anyone know anything about Tailscale? Used it? Recommend it? How does it stack up in terms of actual secure networking and VPN access to your infra? Can it be trusted?

I notice it uses WireGuard™ and is actually written in Go 😅

slides/go-generics.md at main - slides - Mills – I’m presenting this tomorrow at work, something I do every Wednesday to teach colleagues about Go concepts, aptly called go mills() 😅
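For anyone who hasn’t used Go generics yet, here’s a tiny example of the two core ideas (type parameters and constraints). It illustrates the feature and is not an excerpt from the slides; Map, Sum, Number and Bytes are made-up names.

```go
package main

import "fmt"

// Number is a constraint listing the types Sum accepts; the ~ also
// admits named types whose underlying type is one of these.
type Number interface {
	~int | ~int64 | ~float64
}

// Map applies f to every element, for any input and output types.
func Map[T, U any](xs []T, f func(T) U) []U {
	out := make([]U, 0, len(xs))
	for _, x := range xs {
		out = append(out, f(x))
	}
	return out
}

// Sum works for any element type satisfying Number.
func Sum[N Number](xs []N) N {
	var total N
	for _, x := range xs {
		total += x
	}
	return total
}

// Bytes is a named type; ~int64 in Number lets Sum accept it.
type Bytes int64

func main() {
	words := []string{"go", "mills()"}
	lengths := Map(words, func(s string) int { return len(s) })
	fmt.Println(lengths, Sum(lengths))   // [2 7] 9
	fmt.Println(Sum([]Bytes{512, 1024})) // 1536
}
```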

Q: Is anyone actually finding the experimental activitypub feature I’ve been working on (for those running main) useful? 🤔 (Because I’m not, and I’m having second thoughts…)

Imagine having this wrapped around your head 😱

@stigatle@yarn.stigatle.no I don’t think this is working very well tonight for some reason 😅

👋 Hey y’all yarners 🤗 – @darch@neotxt.dk and I have been discussing in our Weekly Yarn.social call (still ongoing… come join us! 🙏) the experimental Yarn.social <-> Activity Pub integration/bridge I’ve been working on… and mostly whether it’s even a good idea at all, and whether we should continue or not.

There are still some outstanding issues that would need to be addressed if we continued regardless.

Some thoughts being discussed:

- Yarn.social pods are more of a “family”, where you invite people into your “home” or “community”

- Opening up to the “Fediverse” is potentially “uncontrolled”

- Even at a small scale (a tiny dev pod) we see activities from servers never interacted with before

- The possibility of abuse (because basically anything can POST things to your Pod now)

- Pull vs. push models: polarising models/views which, whilst in theory they can be made to work together, raise the question: should they?

Go! 👏

So who currently is running main or edge of Yarn.social’s yarnd on their pod and has activitypub and webfinger enabled? 🤔

I’ll be available on video for the next couple of hours, if anyone wants to join:

This is the GraphQL compiler complaining right? 🤔

Sometimes being in a webinar with Google™ engineers makes you feel quite dumb and that you just realise how much you don’t know 🤣

📣 Update on Activity Pub: Just a quick update on the Yarn.social <-> Activity Pub (aka Mastodon and others):

- Can follow other Activity Pub actors ✅

- Can be followed by other Activity Pub actors ✅

- Your posts can be seen by Activity Pub actors ✅

- You can see posts from Activity Pub actors ✅

What does not yet work:

- Translating replies (aka threading) ❌

Need some help with Yarn.social’s integration with Activity Pub – Specifically it appears that Mastodon servers don’t like what I’m doing somewhere 😅 Anyone able to help? 🤔

I’m online at https://meet.mills.io/call/Yarn.social if anyone wants to join 👌

@stigatle@yarn.stigatle.no The reason I was thinking about a separate binary / project / service is to bring along our Twtxt friends like @movq@www.uninformativ.de and @lyse@lyse.isobeef.org and anyone else that self-hosts their Twtxt feed. But this of course has added complexities, like spinning up yarnd along with whatever this thing will be called, and configuring and connecting the two. Fortunately, yarnd already does this with the feeds service and defaults to using feeds.twtxt.net, so we would do something similar there too. Further thoughts? 🤔

On the topic of Programming Languages and Telemetry, I’m kind of curious… Do any of these programming languages and their toolchains collect telemetry on their usage and effectively “spy” on your development?

- Python

- C

- C++

- Java

- C#

- Visual Basic

- Javascript

- SQL

- Assembly Language

- PHP

What’s with all these tech companies going through massive layoffs? The latest one is Intel, though instead they’re cutting salaries to avoid layoffs.

@abucci@anthony.buc.ci Where did I hate on SQL databases? 🤔

@eldersnake@we.loveprivacy.club Several reasons:

- It’s another language to learn (SQL)

- It adds another dependency to your system

- It’s another failure mode (database blows up, schema changes, indexes, etc.)

- It increases security problems (now you have to worry about being SQL-safe)

And most of all, in my experience, it doesn’t actually solve any problems that a good key/value store can’t solve with good indexes and good data structures. I’m just no longer a fan. I used to use MySQL, SQLite, etc. back in the day, but these days, nope, I wouldn’t even go anywhere near a database (for my own projects) if I can help it – It’s just another thing that can fail, another operational overhead.
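As a rough illustration of “key/value + good indexes”, here’s a toy in-memory Go store that keeps a secondary index alongside the primary data. It’s hypothetical and says nothing about how yarnd actually persists data; a real store would also need persistence and atomic updates.

```go
package main

import (
	"fmt"
	"sort"
)

// Store is a toy in-memory key/value store with one secondary index.
// It assumes a given hash always belongs to the same feed.
type Store struct {
	twts   map[string]string          // primary: hash -> text
	byFeed map[string]map[string]bool // index: feed -> set of hashes
}

func NewStore() *Store {
	return &Store{twts: map[string]string{}, byFeed: map[string]map[string]bool{}}
}

// Put stores the value and updates the index in the same step.
func (s *Store) Put(hash, feed, text string) {
	s.twts[hash] = text
	if s.byFeed[feed] == nil {
		s.byFeed[feed] = map[string]bool{}
	}
	s.byFeed[feed][hash] = true
}

// Get is a plain primary-key lookup.
func (s *Store) Get(hash string) (string, bool) {
	text, ok := s.twts[hash]
	return text, ok
}

// ByFeed answers "all twts for feed X" from the index, without scanning
// every stored twt. Hashes are sorted because map order is random.
func (s *Store) ByFeed(feed string) []string {
	hashes := make([]string, 0, len(s.byFeed[feed]))
	for h := range s.byFeed[feed] {
		hashes = append(hashes, h)
	}
	sort.Strings(hashes)
	return hashes
}

func main() {
	s := NewStore()
	s.Put("abc1234", "alice", "hello")
	s.Put("def5678", "alice", "again")
	s.Put("0a0b0c0", "bob", "hi")
	fmt.Println(s.ByFeed("alice")) // [abc1234 def5678]
	fmt.Println(s.Get("0a0b0c0"))  // hi true
}
```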

I’ve never liked the idea of having everything displayed all of the time for all of history.

And I still don’t: Search and Bookmarks are better tools for this IMO.

From a technical perspective however, we will not introduce any CGO dependencies into yarnd – It makes portability harder.

Also I hate SQL 😆

@bender@twtxt.net You mean @eaplmx@twtxt.net’s reply didn’t show up in your mentions? 🤔

Hey @kdx@kdx.re What client are you using?

So… Just out of curiosity (again), back of paper napkin math. Based on Vultr pricing, running my infra in the “Cloud”™ would cost me upwards of $1300 per month. That’s about ~10x more than my current power bill for my entire household 😅 (10 VMs of ~4 vCPUs and 4-6GB of RAM each + 10TB of storage on the NAS)

Anyone know what this might be about?

    [1134036.271114] ata1.00: exception Emask 0x0 SAct 0x4 SErr 0x880000 action 0x6 frozen
    [1134036.271478] ata1: SError: { 10B8B LinkSeq }
    [1134036.271829] ata1.00: failed command: WRITE FPDMA QUEUED
    [1134036.272182] ata1.00: cmd 61/20:10:e0:75:6e/00:00:11:00:00/40 tag 2 ncq 16384 out
             res 40/00:01:00:4f:c2/00:00:00:00:00/00 Emask 0x4 (timeout)
    [1134036.272895] ata1.00: status: { DRDY }
    [1134036.273245] ata1: hard resetting link
    [1134037.447033] ata1: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
    [1134038.747174] ata1.00: configured for UDMA/133
    [1134038.747179] ata1.00: device reported invalid CHS sector 0
    [1134038.747185] ata1: EH complete

restic · Backups done right! – In case anyone hasn’t used this wonderful tool yet, I can assure you beyond a doubt that restic is really quite fantastic 👌 #backups